When it was revealed that Meta had used a dataset of pirated books to train its latest AI model, Llama, Australian authors were furious. Works by writers including Liane Moriarty, Tim Winton, Melissa Lucashenko, Christos Tsiolkas and many others had been scraped from the online shadow library LibGen without permission.

It was just the latest in a series of incidents where published books have been fed into commercial AI systems without the knowledge of their creators, and without any credit or compensation.

Our new report, Australian Authors’ Sentiment on Generative AI, co-authored with Shujie Liang and Tessa Barrington, offers the first large-scale empirical study of how Australian authors and illustrators feel about this rapidly evolving technology. It reveals just how widespread the concern is.

Unsurprisingly, most Australian authors do not want their work used to train AI systems. But this is not only about payment. It is about consent, trust and the future of their profession.

A clear rejection

In late 2024, we surveyed over 400 members of the Australian Society of Authors, the national peak body for writers and illustrators. We asked about their use of AI, their understanding of how generative models are trained, and whether they would agree to their work being used for training – with or without compensation.

79% said they would not allow their existing work to be used to train AI models, even if they were paid. Almost as many – 77% – said the same about future work.

Among those open to payment, half expected at least $A1,000 per work. A small number nominated figures in the tens or hundreds of thousands.

But the dominant response, from both established and emerging authors, was a firm “no”.

This presents a serious roadblock for those hoping publishers might broker blanket licensing agreements with AI firms. If most authors are unwilling to grant permission under any terms, then standard contract clauses or opt-in models are unlikely to deliver a practical or ethical solution.

Income loss and a shrinking profession

Authors are not just concerned about how their past work is used. They are also worried about what generative AI means for their future.

70% of respondents believe AI is likely to displace income-generating work for authors and illustrators. Some already reported losing jobs or being offered lower rates based on assumptions that AI tools would cut costs.

This fear compounds an already difficult economic reality. For many, writing is sustained only through other jobs or a partner’s support. As previous research has shown, most Australian authors earn well below the national average. In 2022, the average income writers earned from their work was $A18,200 per annum.

Generative AI risks further eroding the already fragile foundation on which Australia’s literary culture depends. If professional writers cannot make a living and new voices cannot see a viable path into the industry, the pipeline of Australian storytelling will shrink.


More than a copyright issue

At first glance, this might seem like a technical or legal issue, concerning rights management and royalty payments. But our findings show the objections run much deeper.

An overwhelming 91% of respondents said it was unfair for their work to be used to train AI models without permission or compensation. More than half believed AI tools could plausibly mimic their creative style. This raised concerns not only about unauthorised use, but also about imitation and displacement.

For many authors, their work is more than just intellectual property. It represents their voice, their identity and years of creative labour, often undertaken with little financial return.

The idea that a machine could replicate that work without consent, credit or payment is not only unsettling; for many, it feels like a fundamental violation of creative ownership.

This is not simply a case of authors being hesitant to engage with emerging technologies. Our findings suggest a more informed and considered stance. Most respondents had a moderate or strong understanding of how generative AI models are trained. They also made clear distinctions between tools that support creativity and those designed to replace it entirely.

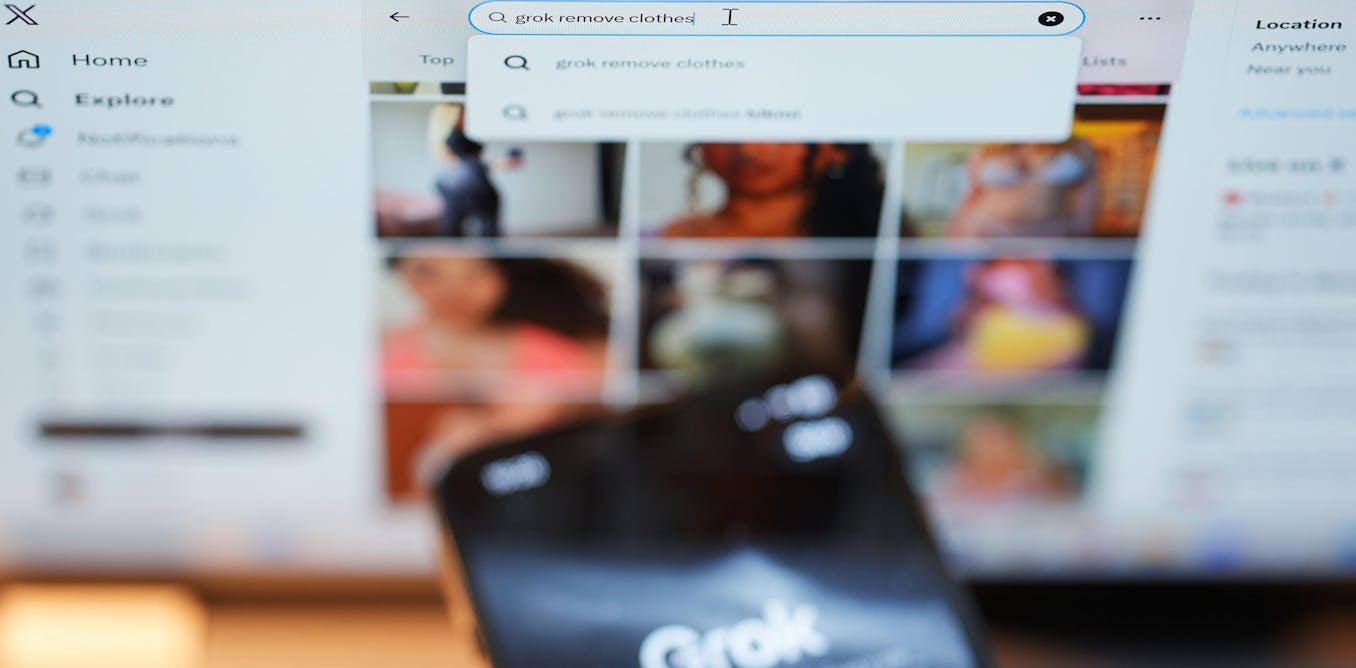

What they lacked was basic information. 80% of respondents did not know whether their work had already been used in AI training. This absence of transparency is a major source of frustration, even for well-informed professionals.

Without clear information, informed consent is impossible. And without consent, even the most innovative AI applications will be viewed with suspicion.

The publishing and tech industries cannot expect trust from creators while keeping them in the dark about how their work is used.

A sustainable future far from guaranteed

Generative AI is already reshaping the creative landscape, but the path ahead remains uncertain.

Our findings reveal a fundamental dilemma. If most Australian authors will not grant permission for their work to be used in AI training, even when compensation is offered, negotiated agreements between AI companies, publishers and authors look unlikely.

What we are seeing is not just a policy gap. It reflects a deeper breakdown in trust. There is a growing belief among authors that the value of their creative work is being eroded by systems built on its use.

The widespread rejection of licensing models points to a looming impasse. If developers proceed without consent when authors are refusing to participate, it will be difficult to build the shared foundations that a sustainable creative economy requires.

Whether that gap can be bridged is still an open question. But if writers cannot see a viable or respected place for themselves, the long-term consequences for Australia’s cultural life will be significant.

If Australia wants a fair and forward-looking creative sector, it cannot afford to leave its authors out of the conversation.

The post “New research reveals Australian authors say no to AI using their work – even if money is on the table” by Paul Crosby, Senior Lecturer, Department of Economics, Macquarie University was published on 05/23/2025 by theconversation.com
