Boston Dynamics has been at the forefront of legged robotics for decades, with Spot being one of their most advanced creations. In their latest video titled “Stepping Up | Reinforcement Learning with Spot | Boston Dynamics,” viewers are taken behind the scenes with Paul Domanico, a Robotics Engineer on the Spot Locomotion team.

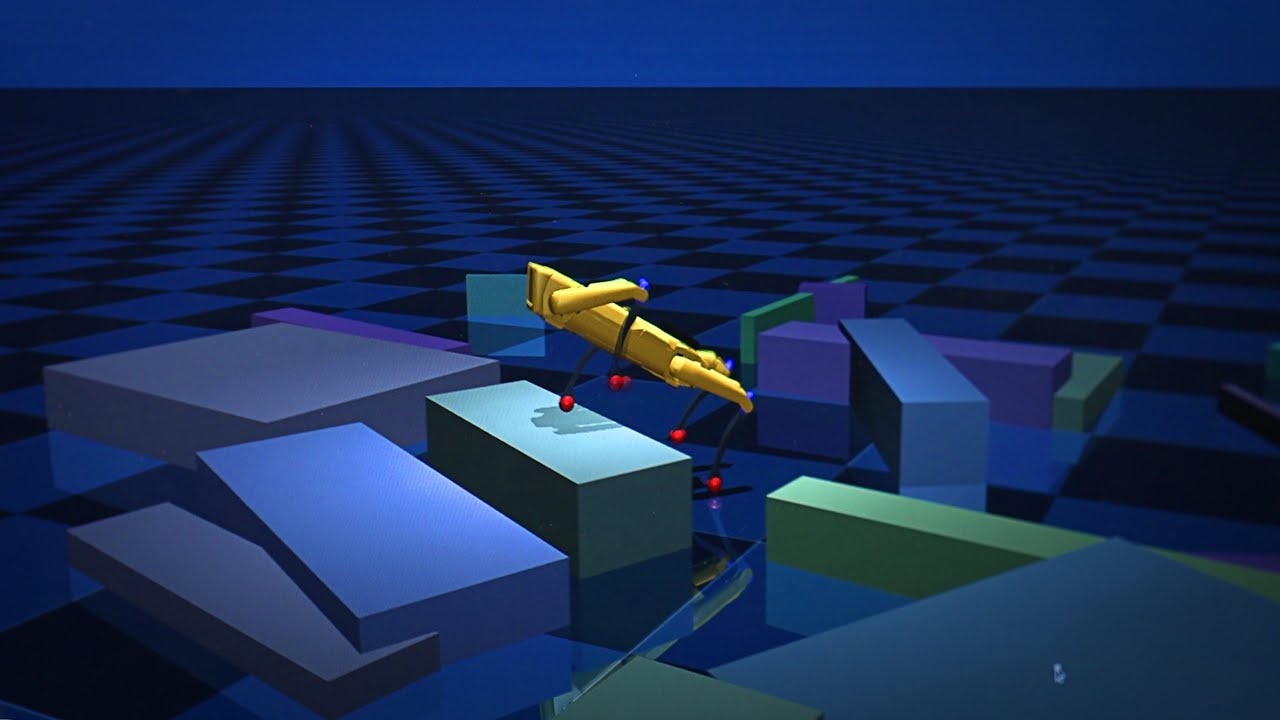

In the video, Domanico discusses how the team uses reinforcement learning to improve Spot’s locomotion. The approach is a hybrid: model predictive control handles the parts of the problem the team can model well, such as rigid-body dynamics, while reinforcement learning takes on the parts that are hard to model or too slow to compute in real time, such as deciding when and where to step. The result is a robot that makes split-second decisions, stepping quickly to recover from a slip but placing its feet carefully when stepping over obstacles.
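The division of labor between a learned component and a model-based one can be sketched roughly as follows. This is only an illustration, not Boston Dynamics’ code: `Observation`, `choose_swing_duration`, `swing_foot_position`, and every threshold are hypothetical. A hand-written rule stands in for the trained policy, and a cubic interpolation stands in for the model-based controller (it is not model predictive control).

```python
from dataclasses import dataclass


@dataclass
class Observation:
    slip_speed: float       # m/s, how fast a stance foot is sliding (hypothetical signal)
    obstacle_height: float  # m, height the swing foot must clear (hypothetical signal)


def choose_swing_duration(obs: Observation) -> float:
    """Stand-in for a learned policy: returns a swing time in seconds.

    A trained network would replace this rule; the thresholds are invented.
    """
    if obs.slip_speed > 0.2:
        return 0.15  # slipping: step as fast as possible to recover
    if obs.obstacle_height > 0.05:
        return 0.45  # stepping over something: slower, more deliberate step
    return 0.30      # nominal walking


def swing_foot_position(start: float, target: float, duration: float, t: float) -> float:
    """Model-based part: smooth cubic blend from the start to the target foothold."""
    s = min(max(t / duration, 0.0), 1.0)  # normalized swing phase, clamped to [0, 1]
    blend = 3 * s**2 - 2 * s**3           # zero velocity at both ends of the swing
    return start + (target - start) * blend


if __name__ == "__main__":
    for obs in (Observation(0.5, 0.0), Observation(0.0, 0.15), Observation(0.0, 0.0)):
        duration = choose_swing_duration(obs)
        midpoint = swing_foot_position(0.0, 0.3, duration, duration / 2)
        print(f"{obs} -> swing {duration:.2f} s, foot at {midpoint:.3f} m mid-swing")
```

The point of the split is that the timing decision, which is hard to write by hand, can be swapped for a learned function without touching the trajectory math that is already well understood.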

With release 4.0, these algorithms became the default behavior for all Spot locomotion, so every user gets them immediately. According to the video, a neural network now updates hundreds of times a second and feeds into the control system, making Spot more reliable and more capable across a wide range of environments.
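To give a feel for what “hundreds of times a second” means in a control loop, here is a minimal sketch in simulated time. The 200 Hz rate, the weights, and the sensor signal are all assumptions made for illustration; the video gives no exact rate and does not describe the network’s inputs or outputs.

```python
import math

CONTROL_RATE_HZ = 200  # assumed; the video only says "hundreds of times a second"
DT = 1.0 / CONTROL_RATE_HZ

# Hypothetical fixed weights for a one-layer network: 2 inputs -> 1 output.
WEIGHTS = (0.8, -0.5)
BIAS = 0.1


def policy(body_velocity: float, slip_speed: float) -> float:
    """Tiny stand-in network: returns a step-timing adjustment in [-1, 1]."""
    return math.tanh(WEIGHTS[0] * body_velocity + WEIGHTS[1] * slip_speed + BIAS)


def run_control_loop(seconds: float) -> list[float]:
    """Advance simulated time at a fixed rate, evaluating the policy once per tick."""
    outputs = []
    ticks = round(seconds * CONTROL_RATE_HZ)
    for k in range(ticks):
        t = k * DT
        body_velocity = 0.5 * math.sin(2 * math.pi * t)  # made-up sensor reading
        slip_speed = 0.0                                 # no slipping in this toy run
        outputs.append(policy(body_velocity, slip_speed))  # would feed the controller
    return outputs


if __name__ == "__main__":
    outputs = run_control_loop(1.0)
    print(f"policy evaluated {len(outputs)} times in one simulated second")
```

At this assumed rate, each evaluation has a budget of 5 ms, which is why the transcript stresses models that are cheap enough to run in real time.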

Overall, the video points to the potential of reinforcement learning beyond Spot’s locomotion: Domanico expects it to enable new capabilities on all of Boston Dynamics’ robots, including manipulation. Where behaviors are too complex to model or to write by hand, data-driven training in simulation can fill the gap, while existing model-based controllers continue to handle what they already do well.
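The transcript describes reinforcement learning as doing two things in simulation: exploring a range of possible solutions and trying to find the best one. A toy version of that idea is an epsilon-greedy bandit choosing among a few swing durations. Everything here is assumed for illustration (the candidate durations, the reward function, the noise level), and a bandit is far simpler than the training Boston Dynamics would actually use.

```python
import random

SWING_OPTIONS = [0.15, 0.25, 0.35, 0.45]  # candidate swing durations in seconds (invented)


def simulate_step(duration: float, rng: random.Random) -> float:
    """Toy simulator: reward peaks at 0.25 s, plus a little noise."""
    return -100.0 * (duration - 0.25) ** 2 + rng.gauss(0.0, 0.1)


def train(episodes: int = 500, epsilon: float = 0.2, seed: int = 0) -> float:
    """Return the swing duration with the highest estimated reward."""
    rng = random.Random(seed)
    counts = [0] * len(SWING_OPTIONS)
    means = [0.0] * len(SWING_OPTIONS)

    def best_index() -> int:
        return max(range(len(SWING_OPTIONS)), key=means.__getitem__)

    def update(i: int) -> None:
        reward = simulate_step(SWING_OPTIONS[i], rng)
        counts[i] += 1
        means[i] += (reward - means[i]) / counts[i]  # running average of rewards

    for i in range(len(SWING_OPTIONS)):  # try every option once before comparing
        update(i)
    for _ in range(episodes):
        if rng.random() < epsilon:
            i = rng.randrange(len(SWING_OPTIONS))  # explore: pick a random option
        else:
            i = best_index()                       # exploit: pick the best so far
        update(i)
    return SWING_OPTIONS[best_index()]


if __name__ == "__main__":
    print(f"best swing duration found: {train():.2f} s")
```

The agent never sees the reward formula; it only sees outcomes from the simulator, which is the sense in which the method is data-driven.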

Watch the video by Boston Dynamics

Author Video Description

In release 4.0, we advanced Spot’s locomotion abilities thanks to the power of reinforcement learning. Paul Domanico, Robotics Engineer at Boston Dynamics, talks through how Spot’s hybrid approach of combining reinforcement learning with model predictive control creates an even more stable robot in the most antagonistic environments.

Learn more about Spot’s locomotion control system: https://bostondynamics.com/blog/starting-on-the-right-foot-with-reinforcement-learning/

Video Transcript

Every single day there are robots moving, and every single day they’re doing crazy things in our office, and it’s so cool to be able to see that just day in and day out. I’m Paul Domanico. I’m a robotics engineer on the Spot Locomotion team. We’re a team of roboticists and software engineers, and we’re responsible for making Spot move essentially anywhere in the world.

Boston Dynamics has been doing legged robotics for decades now, and that’s largely based on our ability to understand and our ability to model these robots. Really, model predictive control is at the root of how we have been able to get as far as we have. If you look at legged locomotion here at Boston Dynamics, we have a really, really good understanding of rigid-body dynamics. We have great models that we can run very quickly and that we think are very accurate for how the robot will in fact move in reality.

But there are parts of the problem that are very, very hard to solve. They’re very, very hard to model, or they’re very hard to model efficiently so that you can run it in real-time control. Something like how the robot is going to move its leg: we understand the dynamics of that leg and how we should move it. But let’s say how the robot should step through the world. There’s a bunch of ways that the robot could sequence its legs. How long should it swing that leg? How long should it hold the leg in contact? Where in the world should the robot step? It’s this very high-dimensional space, and these are problems where, as engineers, as scientists, it’s really hard to come up with a good solution. Now, with reinforcement learning, as long as we’re able to simulate these hard problems, there’s a class of problems that we should be able to tackle.

Reinforcement learning is a subfield of machine learning. At the heart of reinforcement learning is data. It’s generating data that basically explains how the model interacts with the environment, and then using that data to train an agent. In reinforcement learning, our model is an agent. It’s going to learn through its experiences in whatever environment we put it in. Reinforcement learning for robotics, that’s largely in simulation, so an agent experiences things a robot would in simulation. And the reinforcement learning algorithm is going to do two things. One, it’s going to explore a range of possible solutions that the robot could have in that environment, and it’s also going to try to find the optimal solution: what is the best way that robot should interact with the environment?

One of the main things we’re trying to do now is we’re trying to find this balance of when can we use model predictive control, when we have models that we think work well for the problem we have, and then when can we use reinforcement learning, when the models may be perhaps too hard to run hundreds of times a second or just very difficult to actually write. So we’re trying to find this hybrid approach where we have a system that we’ve written the models for, and then we have a system that we’ve generated the data to describe, and we’re trying to train systems that will then perform an optimal action based on that data and interplay with our models. And we think that that is going to give us the best performance for Spot locomotion.

Right now we’ve seen some really exciting results using machine learning, and specifically using machine learning in this hybrid approach, where we’re taking advantage of the controllers that work really well for us now and replacing some of the hard parts of the problems. So something like when the robot is slipping, or determining where to step: there’s a lot of split-second decisions that the robot needs to make. If it’s slipping and the feet slide out from the robot, it’s generally a good thing for the robot to step as fast as possible and to recover. But if you imagine the robot is stepping down or stepping up or stepping over something, you can’t necessarily step just as fast as possible, because you need to be able to get the foot to a good location. The robot’s able to take quick steps when it needs to, but also really be careful and intentional about stepping over things, stepping in good locations, and it seems to be able to balance these solutions in ways that were better than what we were writing ourselves.

With our latest software release, every customer, every user of a Spot robot is immediately going to get access to the benefits of these new algorithms, because this is now the default behavior for all Spot locomotion. Whenever you’re using a Spot, there is now going to be a neural network updating hundreds of times a second, which is going to be impacting the control system, and it’s going to make it more reliable and more capable to traverse a wide set of environments.

There’s a saying in robotics that there’s no such thing as a good model, there’s only useful ones. So no model is entirely accurate, and if things become too complex, or we want to do totally new behaviors that we don’t know how to write ourselves, we think we can use the data and use reinforcement learning to enable new capabilities on Spot, but also all our robots, and not just locomotion. Things like manipulation, being dexterous and agile in the world we exist in as humans, are going to be more capable using these algorithms.

Video “Stepping Up | Reinforcement Learning with Spot | Boston Dynamics” was uploaded on 03/19/2024 to the YouTube channel Boston Dynamics