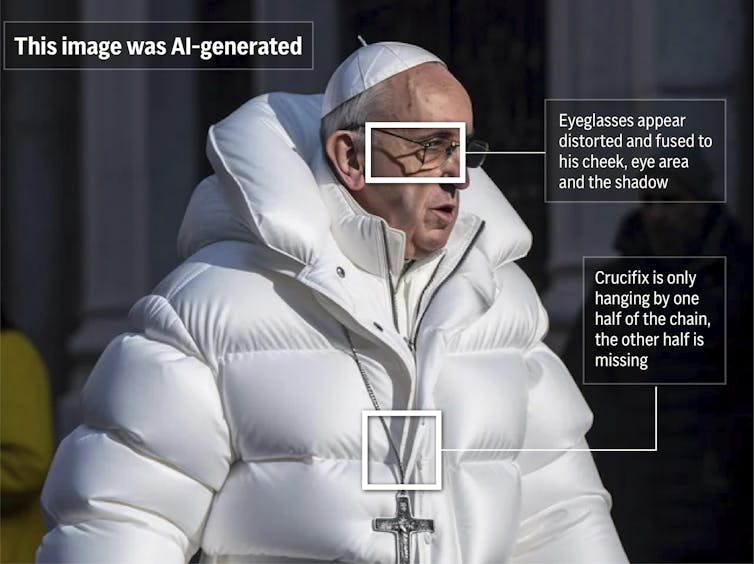

In the age of generative artificial intelligence (GenAI), the phrase “I’ll believe it when I see it” no longer stands. Not only is GenAI able to generate manipulated representations of people, but it can also be used to generate entirely fictitious people and scenarios.

GenAI tools are affordable and accessible to all, and AI-generated images are becoming ubiquitous. If you’ve been doom-scrolling through your news or Instagram feeds, chances are you’ve scrolled past an AI-generated image without even realizing it.

As a computer science researcher and PhD candidate at the University of Waterloo, I’m increasingly concerned by my own inability to discern what’s real from what’s AI-generated.

My research team conducted a survey in which nearly 300 participants were asked to classify a set of images as real or fake. In 2022, the average classification accuracy of participants was 61 per cent. Participants were more likely to correctly classify real images than fake ones. Accuracy is likely much lower today, given how rapidly GenAI technology is improving.
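
To make the distinction between overall accuracy and accuracy on real versus fake images concrete, here is a minimal Python sketch. The `Response` record, the function names and the six toy responses are all made up for illustration; they are not the survey's data or the analysis code my team used.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One participant's answer for one image (hypothetical record layout)."""
    is_fake: bool       # ground truth: was the image AI-generated?
    labeled_fake: bool  # what the participant answered


def accuracy(responses):
    """Fraction of responses where the answer matches the ground truth."""
    if not responses:
        raise ValueError("no responses to score")
    correct = sum(r.is_fake == r.labeled_fake for r in responses)
    return correct / len(responses)


def per_class_accuracy(responses):
    """Accuracy computed separately for real images and for fake images."""
    real = [r for r in responses if not r.is_fake]
    fake = [r for r in responses if r.is_fake]
    return {"real": accuracy(real), "fake": accuracy(fake)}


# Made-up toy responses, NOT the study's data.
toy = [
    Response(is_fake=False, labeled_fake=False),
    Response(is_fake=False, labeled_fake=False),
    Response(is_fake=False, labeled_fake=True),
    Response(is_fake=True, labeled_fake=True),
    Response(is_fake=True, labeled_fake=False),
    Response(is_fake=True, labeled_fake=False),
]

overall = accuracy(toy)
by_class = per_class_accuracy(toy)
print(f"overall: {overall:.2f}, real: {by_class['real']:.2f}, fake: {by_class['fake']:.2f}")
```

In this toy set, participants do better on real images than on fake ones, which is the same direction of gap we observed, although the numbers themselves are invented.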

We also analyzed their responses using text mining and keyword extraction to learn the common justifications participants provided for their classifications. It was immediately apparent that, in a generated image, a person’s eyes were considered the telltale indicator that the image was probably AI-generated. AI also struggled to produce realistic teeth, ears and hair.
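
A very simple form of keyword extraction can be sketched as counting how many responses mention each word once common filler words are removed. The stopword list, the function name and the example justifications below are assumptions for illustration only; our actual text-mining pipeline is not reproduced here.

```python
import re
from collections import Counter

# Small hand-picked stopword list (an assumption; real pipelines use larger ones).
STOPWORDS = {
    "the", "a", "an", "and", "are", "is", "look", "looks",
    "too", "her", "his", "into", "in", "of", "it", "off",
}


def top_keywords(justifications, n=3):
    """Return the n words that appear in the most justifications."""
    counts = Counter()
    for text in justifications:
        # Use a set so each word is counted at most once per response.
        words = set(re.findall(r"[a-z]+", text.lower()))
        counts.update(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)


# Hypothetical free-text justifications, NOT real survey responses.
toy_justifications = [
    "The eyes look strange",
    "Eyes are asymmetric and the teeth are blurry",
    "Her hair blends into the background, and the eyes are off",
    "The teeth look too uniform",
]

print(top_keywords(toy_justifications, n=2))
```

Counting each word once per response keeps a single long answer from dominating the ranking.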

But these tools are constantly improving. The telltale signs we could once use to detect AI-generated images are no longer reliable.

Improving images

Researchers began exploring the use of GANs for image and video synthesis in 2014. The seminal paper “Generative Adversarial Nets” introduced the adversarial process of GANs. Although this paper does not mention deepfakes, it was the springboard for GAN-based deepfakes.

Early GenAI art from the same period includes the “DeepDream” images created by Google engineer Alexander Mordvintsev in 2015, which were produced with a convolutional neural network rather than a GAN.

But in 2017, the term “deepfake” was officially born after a Reddit user, whose username was “deepfakes,” used GANs to generate synthetic celebrity pornography.

In 2019, software engineer Philip Wang created the “ThisPersonDoesNotExist” website, which used GANs to generate realistic-looking images of people. That same year, the release of the deepfake detection challenge, which sought new and improved deepfake detection models, garnered widespread attention and led to the rise of deepfakes.

About a decade after the paper was published, one of the authors of the “Generative Adversarial Nets” paper, Canadian computer scientist Yoshua Bengio, began sharing his concerns about the need to regulate AI due to the potential dangers such technology could pose to humanity.

Bengio and other AI trailblazers signed an open letter in 2024, calling for better deepfake regulation. He also led the first International AI Safety Report, which was published at the beginning of 2025.

Hao Li, deepfake pioneer and one of the world’s top deepfake artists, conceded in a manner eerily reminiscent of Robert Oppenheimer’s famous “Now I Am Become Death” quote:

“This is developing more rapidly than I thought. Soon, it’s going to get to the point where there is no way that we can actually detect ‘deepfakes’ anymore, so we have to look at other types of solutions.”

The new disinformation

Big tech companies have indeed been encouraging the development of algorithms that can detect deepfakes. These algorithms commonly look for the following signs to determine if content is a deepfake:

- Speech rate, measured as the number of words spoken per minute (the average human speech rate is 120-150 words per minute),

- Facial expressions, based on known co-ordinates of the human eyes, eyebrows, nose, lips, teeth and facial contours,

- Reflections in the eyes, which tend to be unconvincing (either missing or oversimplified),

- Image saturation, with AI-generated images being less saturated and containing a lower number of underexposed pixels compared to pictures taken by an HDR camera.
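
Two of the signals in the list above, speech rate and image saturation, can be approximated with simple measurements. The Python sketch below is a rough illustration, not the detection algorithms that big tech companies use. The function names and the underexposure threshold are hypothetical, and any one measurement on its own is far too weak to label content as a deepfake.

```python
import colorsys

# Typical human speech rate in words per minute, as cited in the list above.
HUMAN_WPM_RANGE = (120, 150)


def speech_rate_wpm(transcript, duration_seconds):
    """Words per minute for a transcript spoken over the given duration."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return len(transcript.split()) * 60 / duration_seconds


def is_speech_rate_typical(wpm, wpm_range=HUMAN_WPM_RANGE):
    """True if the rate falls inside the typical human range."""
    low, high = wpm_range
    return low <= wpm <= high


def mean_saturation(pixels):
    """Mean HSV saturation (0 to 1) over (r, g, b) pixels with 0-255 channels."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("no pixels given")
    total = sum(
        colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1] for r, g, b in pixels
    )
    return total / len(pixels)


def underexposed_fraction(pixels, threshold=0.1):
    """Share of pixels whose HSV value (brightness) is below the threshold.

    The 0.1 threshold is an arbitrary assumption chosen for illustration.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("no pixels given")
    dark = sum(
        1
        for r, g, b in pixels
        if colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[2] < threshold
    )
    return dark / len(pixels)


# Toy inputs for illustration only.
wpm = speech_rate_wpm("one two three four", duration_seconds=2)
print(wpm, is_speech_rate_typical(wpm))
print(mean_saturation([(255, 0, 0), (128, 128, 128)]))
print(underexposed_fraction([(0, 0, 0), (255, 255, 255)]))
```

A real detector would combine many such features and learn its thresholds from labelled data rather than hard-coding them.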

But even these traditional deepfake detection algorithms suffer from several drawbacks. They are usually trained on high-resolution images, so they may fail to detect deepfakes in low-resolution surveillance footage, or when the subject is poorly illuminated or posed in a way the model has not seen.

Despite flimsy and inadequate attempts at regulation, rogue players continue to use deepfakes and text-to-image AI synthesis for nefarious purposes. The consequences of this unregulated use range from political destabilization at a national and global level to the destruction of reputations caused by very personal attacks.

Disinformation isn’t new, but the modes of propagating it are constantly changing. Deepfakes can be used not only to spread disinformation — that is, to posit that something false is true — but also to create plausible deniability and posit that something true is false.

It’s safe to say that in today’s world, seeing will never be believing again. What might once have been irrefutable evidence could very well be an AI-generated image.

The post “As generative AI becomes more sophisticated, it’s harder to distinguish the real from the deepfake” by Andreea Pocol, PhD candidate, Computer Science, University of Waterloo was published on 03/25/2025 by theconversation.com