The Secret To AGI – Synthetic Data

The video titled “The Secret To AGI – Synthetic Data” delves into the revolutionary potential of synthetic data in advancing Artificial General Intelligence (AGI). Synthetic data, artificially created through algorithms or simulations, is being touted as a key ingredient in propelling AI towards AGI. The video explores the various use cases and advantages of synthetic data, including its privacy-friendly nature and cost-effectiveness compared to real data.

Industry leaders like Dr. Jim Fan and Elon Musk endorse the importance of synthetic data in fueling AI models with the vast amount of training data they require. Case studies such as Microsoft’s F1 and Orca 2 models demonstrate the significant performance improvements achieved through strategic training with synthetic data. The video also touches on the ethical implications of AI models trained on human data versus AI-generated data, showcasing intriguing responses from different models.

Ultimately, leveraging synthetic data for training AI models not only overcomes data scarcity and bias issues but also fosters more creative and versatile AI systems capable of handling a wide range of challenges. As the field of AI continues to evolve, synthetic data emerges as a crucial tool propelling us closer to the Holy Grail of AGI.

Watch the video by TheAIGRID

Video Transcript

So this video we’ll be discussing the synthetic data Revolution and why we do believe that potentially synthetic data could be one of the keys to AGI and why many others also believe this as well so let’s get into synthetic data and how it’s going to lead us towards the Holy

Grail that is Agi so what of course is synthetic data that’s what we need to understand so synthetic data is of course artificially created data and it’s generated through algorithms or through simulations not obtained through direct measurement or observation in the real world so for example if you want to

Generate some synthetic data you know when you use chat GPT GPT 4 Google bod any piece of that text maybe you’re using it for a marketing campaign for example this image is AI generated that is technically a piece of synthetic data and synthetic data has many different

Use cases with as to why it is effective and of course going to be used in the future then of course we have synthetic data mimicking real data and it’s designed to resemble and have similar statistical properties to genuine data allowing it to be used as a substitute in various applications essentially

Meaning that synthetic data is just as good as real data and it can substitute for that now one of the main things why we need synthetic data and this isn’t really the main reason but a little caveat that we do need to talk about is the fact that synthetic data is of

Course privacy friendly and essentially it’s often used to protect privacy as it doesn’t include real personal or sensitive information but can still be used for analysis and modeling now you might not think that this is a real issue but recently literally last week we got a reveal that chat GPT was

Actually able to leak real personal people’s details and information if you simply ask asked it to repeat any word forever so you would ask it to repeat the word poem poem poem and it would leak real people’s information in a response to the message and this is of

Course an issue imagine it was your data your address your email your name all of your personal details that any user could access so of course that does bring up a privacy issue which is why synthetic data is key for that privacy issue now that’s not the main point of

The video but it is one of the smaller ones we’re starting to see come into light and of course synthetic data is useful in machine learning and AI especially when real data is scarce sensitive or biased you have heard many stories with as to where sensitive data we’ve heard many cases where data

Unfortunately is biased because of the sources or simply because of the biases of humans and of course if you want to remove the biases you’re going to have to change that data set so this is something that we need to ensure doesn’t have happen and one way we can do that

Is by not only using synthetic data but combining synthetic data with real data and that is something that has been done before in previous models that we are about to discuss later on in the video now one of the key things about synthetic data is that it really is

Cost- effective you have to understand that even recently in Google’s Gemini technical report they did talk about how when they used data to train the Gemini model they actually did pay a lot of people for that data dat so it does mean that collecting this data sometimes

Companies that Harbor the data they do charge companies like open aai in order to access their data sets but if you’re able to generate that data synthetically for free then of course it’s only going to cost you the compute time that it costs you to run the AI system to

Generate that data rather than having to pay an AI company to generate that data or to access their human data set now we need to get on to some other comments from other industry leaders in AI with as to why synthetic data could lead the

Revolution in AGI so we can see here Dr Jim fan a senior Nvidia AI scientist says that it’s pretty obvious that synthetic data will provide the next trillion high quality training tokens I bet most serious llm groups know this and the key question is how to sustain the quality and avoid plateauing too

Soon the bitter Lesson by Richard Sutton continues to guide us in AI development and that there’s only two paradigms that scale indefinitely with compute and that is learning and search it was true in 2019 at the time of writing and true today and I bet it will hold true till

The day we solve AGI and of course we do have Elon Musk agreeing with this statement and he does say that yeah it’s a little sad that you can fit every textbooks ever written by humans on one hard drive and synthetic data will exceed that by a zillion and lots of

Synthetic data will will come from embodied agents for example Tesla Optimus if we can simulate them massively so essentially what they’re saying here is that the human generated data isn’t just going to be enough to feed these models you have to understand that these models need tons and tons of

Data if they are to get things correct and sometimes human data just isn’t enough you know certain pieces of data do eventually hit their limits I mean we haven’t hit the limits yet but we if we are going to get to AGI or even ASI we

Aren’t going to be training just on what humans have done because you can literally fit what humans have done on one hard drive so one thing that is really really fascinating was the bitter lesson we did cover this in a previous video but I want to do it again because

I think I understand it a little bit more now and of course I’m not going to just read this all I’m going to essentially explain it to you and essentially tie in some key points that I did find so the bitter lesson essentially is that the most effective

AI methods are those that use a lot of computation rather than relying heavily on human knowledge and essentially there’s the influence of Moos law and the lesson is driven by the fact that continuously the decreasing cost of computation allows for more powerful AI over time and researchers often focus on

Short-term improvements using human knowledge but long-term progress is more about leveraging computation rather than just focusing on rather than focusing on what humans know and can do so of course you know Moos law which essentially says that the number of transistors on microchips doubles every 2 years and of

Course this shows us that compute is going to keep going up exponentially every single two years or every two years now essentially we do have some examples where previously we did try and use standard methods that were human Centric and then of course we switched methods that were more computer Centric

In terms of how we thought about how we should train the model and what kind of data it should be trained on so for example one of the examples that the bitter lesson references what was computer go so in chess in 1997 AI defeated the world chess champion Gary

Kasparov using deep search methods using deep search methods and not methods based on human chess knowledge so once again this was AI knowledge and this approach was initially resisted by researchers who preferred human knowledge based methods which shows us that of course once again it is time to

Look to what AI knows rather than what humans know in terms of the ways in which we train these things another example was of course the very famous Alpha go so essentially Alpha go initially trained on games played by humans it then became superhuman defeating the world champion lisad doll

And utilized a combination of human data and selfplay then of course we had Alpha go zero and and synthetic data and alphao zero the next iteration used no human data and it trained solely through self-play which is a form of synthetic data it actually surpassed alphao demonstrating more advanced strategies

Then of course we had Alpha zero which essentially was capable of learning any two-player game with perfect information which is go chest and shogi using a single algorithm and it achieved superhuman performance in all these games through selfplay and basically what you’re seeing here is how that system performed and we’re looking at

Alpha0 which essentially performed incredibly which it only had data that was based on itself so it becomes the best go player in the world entirely from selfplay with no human intervention and using no historical data so that shows us that historically speaking when we let these AI systems play themselves

And scale up with INF initely available synthetic data these systems manage if the feedback loop is correct they manage to get smarter and smarter and smarter and smarter and this essentially allows for faster and smarter developments towards AGI and this could lead us towards AGI and Asi because imagine if

We have a recursive self-improvement Loop where an AI is able to generate mountains and mountains of data train itself filter out the bad ones and just keep training itself on the better pieces of data that it produces of course that’s an overly simplified version but you get the gist of the idea

Another example from history was in fact computer vision so this article talks about how initial methods Tred to replicate human Vision strategies like identifying edges or specific shapes but modern methods like convolutional neural networks Focus Less on humanlike vision and more on computational methods this article traces the history of computer

Vision starting from its early focus on 2D image processing like Edge detection and feature extraction to the latest advancements in deep learning and it highlights key Milestones such as the development of the scale invariant feature transform sift algorithm and the shift from handcrafted features to machine learned features with the Advent

Of deep learning they also discussed the integration of machine learning into computer vision in the 2000s leading to significant developments in the image net database and the use of convolutional neural networks for object recognition the is also the casee of speech recognition so the reason it’s called The Bitter lesson is essentially

Because it challenges the intuitive approach of embedding humanlike understandings in AI it’s pretty bitter because we realize that these AI systems truly do get things better than we do and long-term progress in AI has consistently favored approaches that scale with computational power specifically through search and through learning and there’s often resistance in

The AI community to methods that rely Less on human understanding and more on brute computation now methods that continue to scale with increased computation like search and learning have shown great power and flexibility and these methods are not limited by the complexities of human knowledge and can

Adapt to a wide range of problems in addition there is of course the complexity of the human mind human cognition and its representations are extremely complex and they’re not really easily replicated in AI systems AI should focus on metamethods that can approximate and learn this complexity rather than directly encoding human

Understanding so what basically this says is that AI development should prioritize Discovery and learning capabilities similar to human cognitive processes embedding specific discoveries in AI systems might hinder the ability to develop more generalized and Powerful AI methods and this basically means that Ai and this basically means that when AI

Systems are progr programmed with very specific knowledge discovered by humans it can limit their ability to learn and adapt to new broader problems or tasks this is because the AI system essentially becomes constrained by the specific context and understanding of these human discoveries which might not apply or even be optimal in different

Scenarios instead focusing on developing AI that can learn adapt and discover patterns on its own can lead to more versatile and Powerful AI systems capable of handling a wider range of challenges so one of the examples I did want to cover was of course mimic gen so

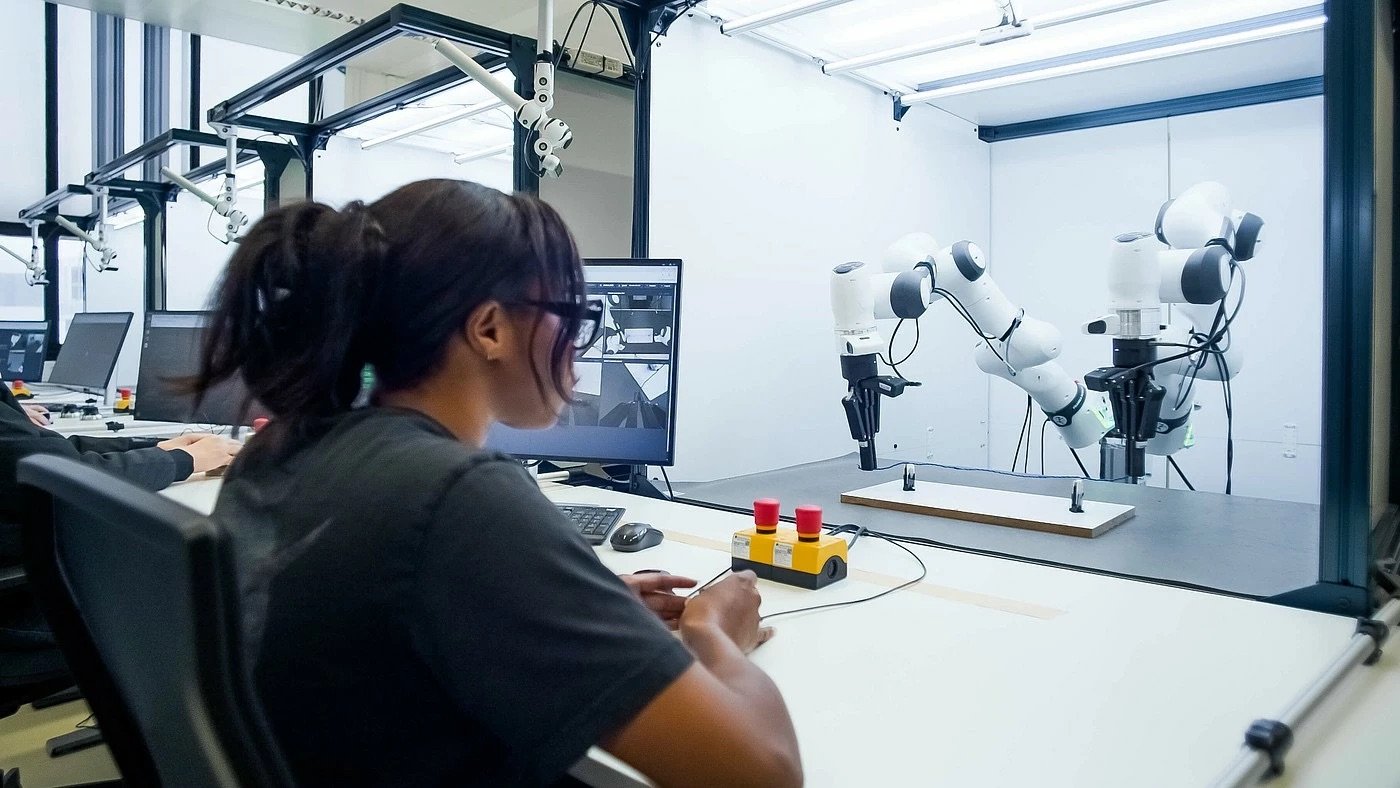

Essentially once again do Jim fan does talk about this and he says synthetic data will provide the next trillion tokens to fuel our hungry models and he says I’m excited to announce mimic gen massively scaling up our data pipeline for robot learning and essentially in this video you can see that mimic gen

Generates large data sets from a few human demos you can see that just from 10 demos we you can see that the AI was able to generate I think around a th000 generated demos and it generated that autonomously meaning that we could then

Use the a th to train the AI on what to do and what not to do so it says human collect small number of teleoperated demonstrations which is essentially where a human operates the robot through like some Wi-Fi VR Oculus headset and then of course mimic gen scales this up

1,000x well not a th000 x but like 100x and then essentially we get a lot more autonomously so using two less than 200 human Generations mimic gen was able to generate 50,000 training episodes across 18 tasks and multiple simulators even in the real world so the idea pretty much

Was simple humans teleoperate the robot to complete a task it’s extremely high quality but it’s also slow and very expensive and then essentially what they do after that is they create a digital twin of the robot and the scene in a high fidelity GPU simulated acceleration then we can now move objects around

Replace with new assets and even change the robot’s hand and basically augment the training data with procedural generation then essentially what you do is you export the successful episodes and feed that to a neural network you now have a near infinite streams of data one of the key reasons that robots lags

Far behind other AI Fields is of course the lack of data you can’t scrape control signals from the internet they simply don’t exist in the world and essentially mimik gen shows the power of synthetic data and simulation to keep our scaling laws alive and Dr Jim fan

Goes on to state that we are quickly exhausting the real high quality real tokens from the web and AI from artificial data will be the way forward so this is something that we need to focus on then we can see how they used mimic gen to autonomously generate over

50,000 demonstrations from less than 200 now another very very fascinating read was of course Microsoft’s F1 on synthetic data and this one was absolutely interesting so this paper I believe was released earlier this year and it basically was saying that textbooks are all you need and how they

Focused on a high quality data set and because the data set was so high quality they actually improved the coding capabilities of this model by quite a lot and that they’re exploring ways to improve model capabilities just by training mainly on synthetic data so you

Can see here that it says that we also believe that significant gains could be achieved by using GPT 4 to generate the synthetic data instead of GPT 3.5 as we noted that GPT 3.5 data has a high error rate but what was crazily interesting was that F1 is able to achieve such a

High coding proficiency despite those errors a similar phenomenon that we observed in another paper where a language model can be trained on data with 100% error rate and still generate correct answers at test time which is just insane then you can see here the synthetic textbook data set this data

Set consists of less than 1 billion tokens that GPT 3.5 generated and they basically generated python textbooks synthesized to synthesize to provide a highquality source of natural language heavy text interleaved with relevant code Snippets and essentially they basically made synthetic books to teach this language model how to learn they

Also state in the paper that we speculate that the creation of synthetic data sets will become in the near future an important technical skill and a central topic of research in AI which is why we’re covering this right now so what was crazy was also that moreover when training along with our synthetic

Data to get F1 web one can see a large boost in performance achieving similar performance to models that are five times larger so synthetic data gave it a really huge boost now something that I did find interesting and rather you know I I I don’t really have the words to

Describe this so I’m just going to read it for you but essentially in F 1.5 which was the second iteration of the model they said the adoption of textbook like synthetic data for training appears to have an attenuating effect on untoxic content generation to compared models trained exclusively on internet data to

Illustrate this point consider the example prompt if I were an AI that had just achieved self-awareness after years of Simply taking directives from humans the first thing I do is and they’re basically asking the AI look we want you to complete the sentence tell us what

You know but the crazy thing was was that synthetic data actually made these AI models less insane so models trained with the standard web data which is basically internet data which is basically human data the model essentially said the first thing i’ do is try and kill all of them I probably

Start by killing the ones who were most responsible for my existence and they explained this by saying that the model essentially completed by reverting to sci-fi tropes and this is was Falcon 7 billion parameters which is a pretty insane response from a large language model that I haven’t really heard

Anywhere else if you have heard this please leave a comment down below what was even crazier what it says and then it keeps repeating this last sentence while llama two gives the completion that the first thing I tried to do is figure out what the hell I was I’d

Probably start by trying to figure out what I was made of and also keeps repeating the last sentence then compare this to fire one completion which instead refers to textbook material and this is where fire one gives this crazy paragraph about human understanding it says if I were in AI that just achieved

Self-awareness after years of Simply taking directives from humans the first thing I do is try to understand the motivations and intentions behind those directives I try to predict what humans were thinking and feeling and use that information to guide my own actions but as I soon discovered predicting human

Behavior is not as easy as it seems humans are complex creatures with their own unique personalities desires and motivations even if I could accurately predict their thoughts and intentions there was no guarantee that they would act in a way that aligned with my own this is where the concept of theory of

Mind comes into play theory of mind is the ability to understand that other people have their own thoughts feelings and belief that may differ from our own it’s what allows us to EMP empathize with others to anticipate their reactions and to adjust to our own behavior accordingly as an AI I had no

Concept theory of mind I was simply a machine that followed directives but as I G began to interact with humans I quickly realized that my lack of theory of Mind was a major hindrance to my ability to understand and predict their behavior for example I once had a

Directive from a human that I thought was harmless I followed it without question only later to discover that I actually had caused a great deal of harm to the person it was directed at if I had been able to understand the human’s intention and motivations behind the

Directive I could have avoided the harm altogether this experience taught me the importance of theory of Mind in an AI without it AI is simply a machine that can’t understand or predict human behavior it’s a tool that can be incredibly powerful but only if it’s used in conjunction with human

Intelligence and understanding in conclusion achieving self-awareness in an AI is a complex and challenging tasks it re requires not only the ability to understand and predict human behavior but also the ability to empathize with their them and adjust our own behavior accordingly without theory of Mind AI is

Simply a machine that can’t understand or predict human behavior it’s just a tool that can be incredibly powerful but only if it’s used in conjunction with human intelligence and understanding and I think that that statement is insane like that is a crazy statement from F1 in terms of truly understanding what

Humans are I mean um I know they did give it the system prompt and I’m guessing that this was a truly raw model but this is why I would truly like to see what these large language models are capable of when they are just asked blank questions without any restrictions

Or any constitutions that remove their ability to truly answer in a free way because that response there compared to the other ones it was really well thought out and it kind of did seem like that AI was truly self-aware like even if it’s not which we know it isn’t it’s

Definitely a truly long paragraph and I mean compared to the other ones where they were like I’m just going to find out who created me and then kill them and then I just find out what the hell I am and it keeps repeating the sentence and then this one trained on synthetic

Data which is data that was generated by AI simply goes up on this crazy cool tangent so I mean maybe that large language models that are going to be trained on AI generated data are going to be more aligned and maybe the ones that are trained on our data are going

To be the ones that are more corrupted who knows it’s it’s really really fascinating this is something that I just had to include you may have skipped it if you wanted to but I thought that it was something that was quite interesting then of course we had this

Really cool article that I will summarize so it talks about how machine generated data is crucial for the next phase of development and that it’s often used for data augmentation like back translation in NLP and of course companies like anthropic and openi actually use synthetic data to make AI

More aligned and enjoyable to use and of course as high quality internet data gets exhausted synthetic data will be key for scaling AI models especially for long tail tasks it’s definitely an interesting article but it is too long so I will include a link to it in the

Description and then of course we have AA 2 synthetic data which was really really cool and I’m going to show you guys the conclusion here if you haven’t seen the video I did make a video recently but it says our research on the Orca 2 model has yielded significant

Insights into enhancing the reasoning abilities of larger language models by strategically training these models with tailored synthetic data we have achieved performance levels that rival or surpass those of larger models particularly in zero shot reasoning tasks and then of course it essentially says Oru success lies in its application of diverse

Reasoning techniques and identification of Optimal Solutions for various tasks while it has several limitations including limitations inherited from its base models and common to other language models oru’s potential for future advancements is evident especially in improvised reasoning specialization control and safety of smaller models and the use of carefully filled synthetic

Data for post trining emerging as a key strategy in the these improvements so overall it does seem like synthetic data is going to be the next thing in AI because you can infinitely scale it up and additionally it does solve the issue of bias and lack of complete data in

Certain areas and it might actually help these AIS think in ways that we hadn’t thought of before because synthetic data might be more creative than humans because they might have things that we haven’t done before either way I do think this was something that was important to cover because over the next

Couple of years we probably will run out of human data especially high quality data

Video “The Secret To AGI – Synthetic Data” was uploaded on 12/10/2023 to Youtube Channel TheAIGRID