You may have seen ads by Apple promoting its new Clean Up feature that can be used to remove elements in a photo. When one of these ads caught my eye this weekend, I was intrigued and updated my software to try it out.

The feature has been available in Australia since December for Apple customers with certain hardware and software capabilities. It’s also available for customers in New Zealand, Canada, Ireland, South Africa, the United Kingdom and the United States.

The tool uses generative artificial intelligence (AI) to analyse the scene and suggest elements that might be distracting. You can see those highlighted in the screenshot below.

Image: T.J. Thomson

You can then tap the suggested element to remove it or circle elements to delete them. The device then uses generative AI to try to create a logical replacement based on the surrounding area.

Easier ways to deceive

Smartphone photo editing apps have been around for more than a decade, but now, you don’t need to download, pay for, or learn to use a new third-party app. If you have an eligible device, you can use these features directly in your smartphone’s default photo app.

Apple’s Clean Up joins a number of similar tools already offered by various tech companies. Those with Android phones might have used Google’s Magic Editor. This lets users move, resize, recolour or delete objects using AI. Users with select Samsung devices can use their built-in photo gallery app to remove elements in photos.

There have always been ways – analogue and, more recently, digital – to deceive. But integrating them into existing software in a free, easy-to-use way makes deception so much easier.

Using AI to edit photos or create new images entirely raises pressing questions around the trustworthiness of photographs and videos. We rely on the images and footage that cameras produce in everything from police body cams and traffic cams to insurance claims and verifying the safe delivery of parcels.

If advances in tech are eroding our trust in pictures and even video, we have to rethink what it means to trust our eyes.

How can these tools be used?

The idea of removing distracting or unwanted elements can be attractive. If you’ve ever been to a crowded tourist hotspot, removing some of the other tourists so you can focus more on the environment might be appealing.

But beyond removing distractions, how else can these tools be used?

Some people use them to remove watermarks. Watermarks are typically added by photographers or companies trying to protect their work from unauthorised use. Removing them makes the unauthorised use less obvious, but no more legal.

Others use them to alter evidence. For example, a seller might edit a photo of a damaged item to claim it was in good condition before shipping.

As image editing and generating tools become more widespread and easier to use, the list of uses balloons proportionately. And some of these uses can be unsavoury.

AI generators can now make realistic-looking receipts, for example. People could then try to submit these to their employer to get reimbursed for expenses not actually incurred.


Can anything we see be trusted anymore?

Considering these developments, what does it mean to have “visual proof” of something?

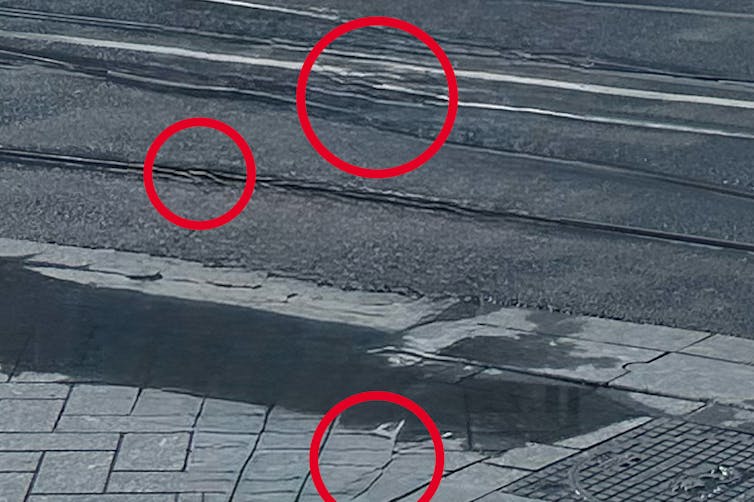

If you think a photo might be edited, zooming in can sometimes reveal anomalies where the AI has stuffed up. Here’s a zoomed-in version of some of the areas where the Clean Up feature generated new content that doesn’t quite match the old.

Image: T.J. Thomson

It’s usually easier to manipulate one image than to convincingly edit multiple images of the same scene in the same way. For this reason, asking to see multiple outtakes that show the same scene from different angles can be a helpful verification strategy.

Seeing something with your own eyes might be the best approach, though this isn’t always possible.

Doing some additional research might also help. For example, with the case of a fake receipt, does the restaurant even exist? Was it open on the day shown on the receipt? Does the menu offer the items allegedly sold? Does the tax rate match the local area’s?
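Some of these checks are simple arithmetic. As a rough illustration only, the sketch below tests whether a receipt's figures are internally consistent and whether the tax charged matches an expected local rate. The function names, the 10% rate and the sample amounts are all hypothetical, and a receipt that passes these checks could still be fake.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def tax_matches(subtotal, tax_charged, expected_rate, tolerance=CENT):
    """Return True if the tax charged is within `tolerance` of subtotal * rate."""
    expected_tax = (Decimal(subtotal) * Decimal(expected_rate)).quantize(
        CENT, rounding=ROUND_HALF_UP
    )
    return abs(expected_tax - Decimal(tax_charged)) <= tolerance


def check_receipt(item_prices, subtotal, tax, total, expected_rate):
    """Return a list of inconsistencies found on a receipt (empty if none)."""
    issues = []
    # Do the individual line items add up to the stated subtotal?
    if sum(Decimal(p) for p in item_prices) != Decimal(subtotal):
        issues.append("line items do not add up to subtotal")
    # Does the tax line match the rate we expect for the area? (assumed rate)
    if not tax_matches(subtotal, tax, expected_rate):
        issues.append("tax does not match expected rate")
    # Does subtotal plus tax equal the stated total?
    if Decimal(subtotal) + Decimal(tax) != Decimal(total):
        issues.append("subtotal plus tax does not equal total")
    return issues


# Hypothetical receipts, assuming a 10% local tax rate.
plausible = check_receipt(["30.00", "50.00"], "80.00", "8.00", "88.00", "0.10")
suspicious = check_receipt(["30.00", "50.00"], "80.00", "6.40", "86.40", "0.10")
```

Amounts are passed as strings and handled as `Decimal` values so that currency comparisons are not thrown off by floating-point rounding.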

Manual verification approaches like the above obviously take time. Trustworthy systems that can automate these mundane tasks are likely to grow in popularity as the risks of AI editing and generation increase.

Likewise, there’s a role for regulators to play in ensuring people don’t misuse AI technology. In the European Union, Apple’s plan to roll out its Apple Intelligence features, which include the Clean Up function, was delayed due to “regulatory uncertainties”.

AI can be used to make our lives easier. Like any technology, it can be used for good or bad. Being aware of what it’s capable of and developing your visual and media literacies are essential to being an informed member of our digital world.

The post “Tools like Apple’s photo Clean Up are yet another nail in the coffin for being able to trust our eyes” by T.J. Thomson, Senior Lecturer in Visual Communication & Digital Media, RMIT University was published on 04/10/2025 by theconversation.com