It’s becoming increasingly difficult to make today’s artificial intelligence (AI) systems work at the scale required to keep advancing. They require enormous amounts of memory to ensure all their processing chips can quickly share all the data they generate in order to work as a unit.

The chips that have powered most of the deep-learning boom over the past decade are called graphics processing units (GPUs). They were originally designed for gaming, not for AI models in which each step of the computation must finish in well under a millisecond.

Each chip contains only a modest amount of memory, so the large language models (LLMs) that underpin our AI systems must be partitioned across many GPUs connected by high-speed networks. LLMs are built by training a model on huge amounts of text, and every stage of running them involves moving data between chips – a process that is not only slow and energy-intensive but also requires ever more chips as models get bigger.
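A rough back-of-the-envelope calculation shows why partitioning is unavoidable. The sketch below only counts the memory needed to hold a model's weights; the parameter count, bytes per parameter and GPU memory size are illustrative assumptions, not figures from this article.

```python
import math

def min_gpus_for_weights(num_params, bytes_per_param, gpu_memory_bytes):
    """Smallest number of GPUs whose combined memory can hold the weights.

    Ignores activations, caches and any other working memory, so real
    deployments need more than this lower bound.
    """
    total_bytes = num_params * bytes_per_param
    return math.ceil(total_bytes / gpu_memory_bytes)

# Assumed example: 70 billion parameters, 2 bytes each, 80 GB per GPU.
gpus = min_gpus_for_weights(70_000_000_000, 2, 80_000_000_000)
print(gpus)
```

Even under these generous assumptions the weights alone do not fit on one chip, and every extra chip adds network traffic.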

For instance, OpenAI used some 200,000 GPUs to create its latest model, GPT-5, around 20 times the number used for the GPT-3 model that powered the original version of ChatGPT three years ago.

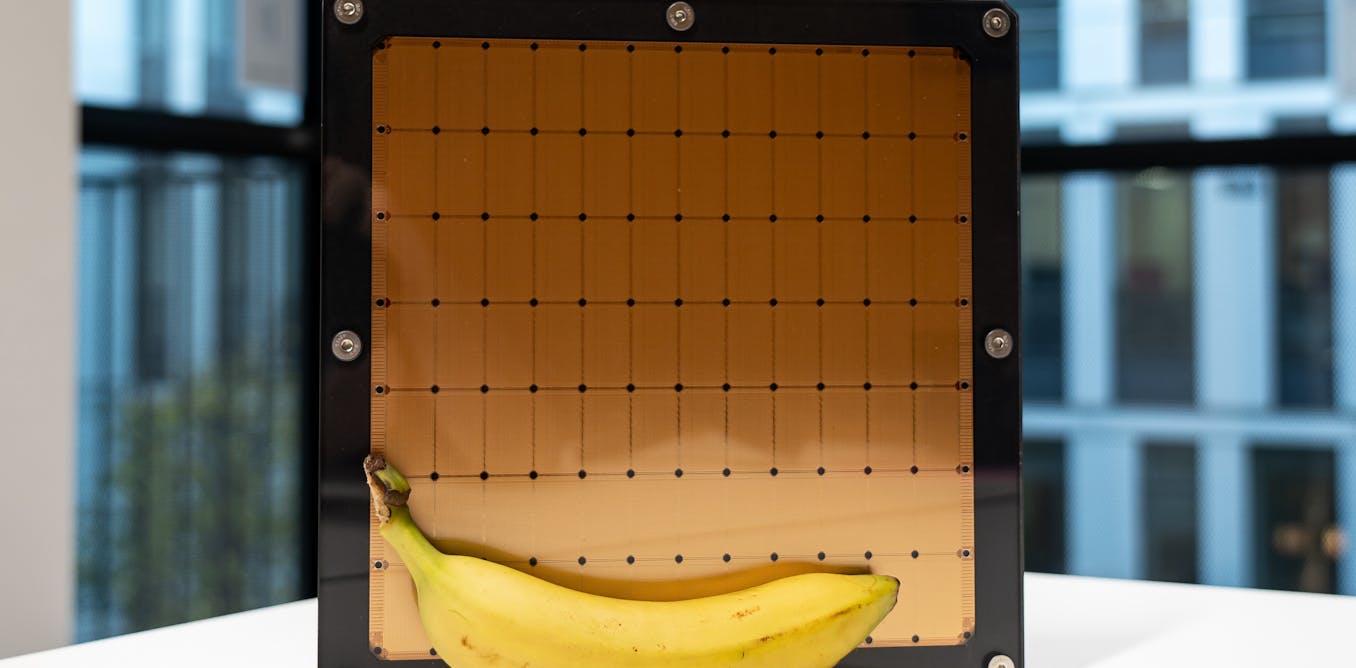

To address the limits of GPUs, companies such as California-based Cerebras have started building a different kind of chip: the wafer-scale processor. These chips are the size of a dinner plate, about five times bigger than GPUs, and only recently became commercially viable. Each contains vast on-chip memory and hundreds of thousands of individual processors (known as cores).

The idea behind them is simple. Instead of coordinating dozens of small chips, keep everything on one piece of silicon so data does not have to travel across networks of hardware. This matters because when an AI model generates an answer – a step known as inference – every delay adds up.

The time it takes the model to respond is called latency, and reducing that latency is crucial for applications that work in real time, such as chatbots, scientific-analysis engines and fraud-detection systems.

Wafer-scale chips alone are not enough, however. Without a software system engineered specifically for their architecture, much of their theoretical performance gain simply never appears.

The deeper challenge

Wafer-scale processors have an unusual combination of characteristics. Each core has very limited memory, so there is a huge need for data to be shared within the chip. Cores can access their own data in nanoseconds, but there are so many cores on each chip over such a large area that reading memory on the far side of the wafer can be a thousand times slower.

Limits in the routing network on each chip also mean that it can’t handle all possible communications between cores at once. In sum, cores cannot access memory fast enough, cannot communicate freely, and ultimately spend most of their time waiting.
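To make the distance penalty concrete, here is a minimal sketch. It assumes cores sit on a square two-dimensional mesh, that a message pays a fixed cost per hop between neighbouring cores, and that a local read costs one nanosecond. The mesh size (500 by 500) and both latency values are illustrative assumptions, not measurements from any real chip.

```python
def hop_distance(src, dst):
    """Number of neighbour-to-neighbour hops between two cores on a 2D mesh."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])

def access_latency_ns(src, dst, local_ns=1.0, per_hop_ns=1.0):
    """Toy latency model: a local read plus a fixed cost for every hop."""
    return local_ns + per_hop_ns * hop_distance(src, dst)

side = 500  # assumed mesh: 500 x 500 = 250,000 cores
corner = (0, 0)

own = access_latency_ns(corner, corner)                   # a core's own memory
near = access_latency_ns(corner, (0, 1))                  # the adjacent core
far = access_latency_ns(corner, (side - 1, side - 1))     # the opposite corner

print(own, near, far)
```

In this toy model the opposite corner is roughly a thousand times slower to reach than local memory, which is why software that keeps each core working on nearby data matters so much.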


We’ve recently been working on a solution called WaferLLM, a joint venture between the University of Edinburgh and Microsoft Research designed to run the largest LLMs efficiently on wafer-scale chips. The vision is to reorganise how an LLM runs so that each core on the chip mainly handles data stored locally.

In what is the first paper to explore this problem from a software perspective, we’ve designed three new algorithms that break the model’s large mathematical operations into much smaller pieces.

These pieces are then arranged so that neighbouring cores can process them together, handing only tiny fragments of data to the next core. This keeps information moving locally across the wafer and avoids the long-distance communication that slows the entire chip down.
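The neighbour-to-neighbour idea can be illustrated with a generic ring-shift matrix-vector multiply. This is a textbook-style sketch, not one of the WaferLLM algorithms: the function name `ring_matvec` and the simple one-dimensional ring of cores are assumptions made for illustration. Each simulated core keeps its own block of matrix rows, and the only data that ever moves is a small chunk of the input vector passed to the adjacent core.

```python
def ring_matvec(W, x, num_cores):
    """Compute y = W @ x on a simulated ring of cores.

    Core i permanently holds row block i of W. Chunks of x circulate
    around the ring, one neighbour hop per step, so no core ever needs
    data from a distant core.
    """
    n = len(x)
    assert len(W) == n and n % num_cores == 0
    b = n // num_cores  # rows (and x entries) per core

    chunks = [x[i * b:(i + 1) * b] for i in range(num_cores)]
    held = list(range(num_cores))  # index of the x chunk each core holds
    y = [[0.0] * b for _ in range(num_cores)]

    for _ in range(num_cores):
        for core in range(num_cores):
            j = held[core]
            for r in range(b):
                row = W[core * b + r]
                # Multiply only the columns matching the chunk held locally.
                y[core][r] += sum(row[j * b + c] * chunks[core][c]
                                  for c in range(b))
        # Every core receives the chunk held by its right-hand neighbour.
        chunks = chunks[1:] + chunks[:1]
        held = held[1:] + held[:1]

    return [value for block in y for value in block]

W = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
x = [1, 0, 2, 1]
print(ring_matvec(W, x, num_cores=2))
```

After as many steps as there are cores, every core has seen every chunk and holds its own slice of the result, having only ever communicated with its neighbour.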

We’ve also introduced new strategies for distributing different parts (or layers) of the LLM across hundreds of thousands of cores without leaving large sections of the wafer idle. This involves coordinating processing and communication to ensure that when one group of cores is computing, another is shifting data, and a third is preparing its next task.
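A toy schedule shows what this kind of overlap looks like. The sketch assumes three groups of cores and three phases of equal length, with each group starting one phase out of step with the next; the phase names and the `staggered_schedule` function are hypothetical and are not taken from WaferLLM.

```python
PHASES = ("prepare", "compute", "shift data")

def staggered_schedule(num_groups, num_steps):
    """Return, for each time step, the phase every group of cores is in.

    Group g is offset by g phases, so the groups cycle through the same
    sequence but never all do the same thing at the same time.
    """
    return [
        [PHASES[(step + group) % len(PHASES)] for group in range(num_groups)]
        for step in range(num_steps)
    ]

for step, row in enumerate(staggered_schedule(num_groups=3, num_steps=3)):
    print(step, row)
```

With three groups, every time step has one group preparing, one computing and one moving data, so neither the cores nor the on-chip network sit idle.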

These adjustments were tested on LLMs like Meta’s Llama and Alibaba’s Qwen using Europe’s largest wafer-scale AI facility at the Edinburgh International Data Facility. WaferLLM made the wafer-scale chips generate text about 100 times faster than before.

Compared with a cluster of 16 GPUs, this amounted to a tenfold reduction in latency and twice the energy efficiency. So whereas some argue that the next leap in AI performance may come from chips designed specifically for LLMs, our results suggest you can instead design software that matches the structure of existing hardware.

In the near term, faster inference at lower cost raises the prospect of more responsive AI tools capable of evaluating many more hypotheses per second. This would improve everything from reasoning assistants to scientific-analysis engines. More data-heavy applications, such as fraud detection and testing ideas through simulations, would also be able to handle dramatically larger workloads without the need for massive GPU clusters.

The future

GPUs remain flexible, widely available and supported by a mature software ecosystem, so wafer-scale chips will not replace them. Instead, they are likely to serve workloads that depend on ultra-low latency, extremely large models or high energy efficiency, such as drug discovery and financial trading.

Meanwhile, GPUs aren’t standing still: better software and continuous improvements in chip design are helping them run more efficiently and deliver more speed. Over time, assuming there’s a need for even greater efficiency, some GPU architectures may also adopt wafer-scale ideas.


The broader lesson is that AI infrastructure is becoming a co-design problem: hardware and software must evolve together. As models grow, simply scaling out with more GPUs will no longer be enough. Systems like WaferLLM show that rethinking the software stack is essential for unlocking the next generation of AI performance.

For the public, the benefits will not appear as new chips on shelves but as AI systems able to support applications that were previously too slow or too expensive to run. Whether in scientific discovery, public-sector services or high-volume analytics, the shift toward wafer-scale computing signals a new phase in how AI systems are built – and what they can achieve.

The post “These dinner-plate sized computer chips are set to supercharge the next leap forward in AI” by Luo Mai, Reader at the School of Informatics, University of Edinburgh was published on 11/20/2025 by theconversation.com