Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

RoboCup German Open: 17–21 April 2024, KASSEL, GERMANY

AUVSI XPONENTIAL 2024: 22–25 April 2024, SAN DIEGO

Eurobot Open 2024: 8–11 May 2024, LA ROCHE-SUR-YON, FRANCE

ICRA 2024: 13–17 May 2024, YOKOHAMA, JAPAN

RoboCup 2024: 17–22 July 2024, EINDHOVEN, NETHERLANDS

Enjoy today’s videos!

USC, UPenn, Texas A&M, Oregon State, Georgia Tech, Temple University, and NASA Johnson Space Center are teaching dog-like robots to navigate craters of the moon and other challenging planetary surfaces in research funded by NASA.

[ USC ]

AMBIDEX is a revolutionary robot that is fast, lightweight, and capable of human-like manipulation. We have added a sensor head, a torso, and a waist to greatly expand its range of movement. Compared to the previous arm-centered version, the overall impression and balance have completely changed.

[ Naver Labs ]

It still needs a lot of work, but the six-armed pollinator, Stickbug, can autonomously navigate and pollinate flowers in a greenhouse now.

I think “needs a lot of work” really means “needs a couple more arms.”

[ Paper ]

Experience the future of robotics as UBTECH’s humanoid robot integrates with Baidu’s ERNIE through AppBuilder! Watch robots understand language and autonomously perform tasks like folding clothes and sorting objects.

[ UBTECH ]

I know the fins on this robot are for walking underwater rather than on land, but watching it move, I feel like it’s destined to evolve into something a little more terrestrial.

iRobot has a new Roomba that vacuums and mops—and at $275, it’s a pretty good deal.

Also, if you are a robot vacuum owner, please please remember to clean the poor thing out from time to time. Here’s how to do it with a Roomba:

[ iRobot ]

The video demonstrates wave-basin testing of a 43 kg (95 lb) amphibious cycloidal-propeller unmanned underwater vehicle (Cyclo-UUV) developed at the Advanced Vertical Flight Laboratory, Texas A&M University. Cyclo-propellers allow 360-degree thrust vectoring, which gives more robust dynamic controllability than UUVs with conventional screw propellers.

[ AVFL ]

Sony is still upgrading Aibo with new features, like the ability to listen to your terrible music and dance along.

[ Aibo ]

Operating robots precisely and at high speeds has been a long-standing goal of robotics research. To enable precise and safe dynamic motions, we introduce a four-degree-of-freedom (4-DoF) tendon-driven robot arm. Tendons allow the actuation to be placed at the base, reducing the robot’s inertia, which we show significantly reduces peak collision forces compared to conventional motor-driven systems. Pairing our robot with pneumatic muscles lets it generate high forces and highly accelerated motions while benefiting from impact resilience through passive compliance.

Rovers on Mars have previously been caught in loose soils, and turning the wheels dug them deeper, just like a car stuck in sand. To avoid this, Rosalind Franklin has a unique wheel-walking locomotion mode to overcome difficult terrain, as well as autonomous navigation software.

[ ESA ]

Cassie is able to walk on sand, gravel, and rocks inside the Robot Playground at the University of Michigan.

Aww, they stopped before they got to the fun rocks.

[ Paper ] via [ Michigan Robotics ]

Not bad for 2016, right?

[ Namiki Lab ]

MOMO has learned the Bam Yang Gang dance moves with its hand dexterity. 🙂 By analyzing 2D dance videos, we extract detailed hand skeleton data, allowing us to recreate the moves in 3D using a hand model. With this information, MOMO replicates the dance motions with its arm and hand joints.

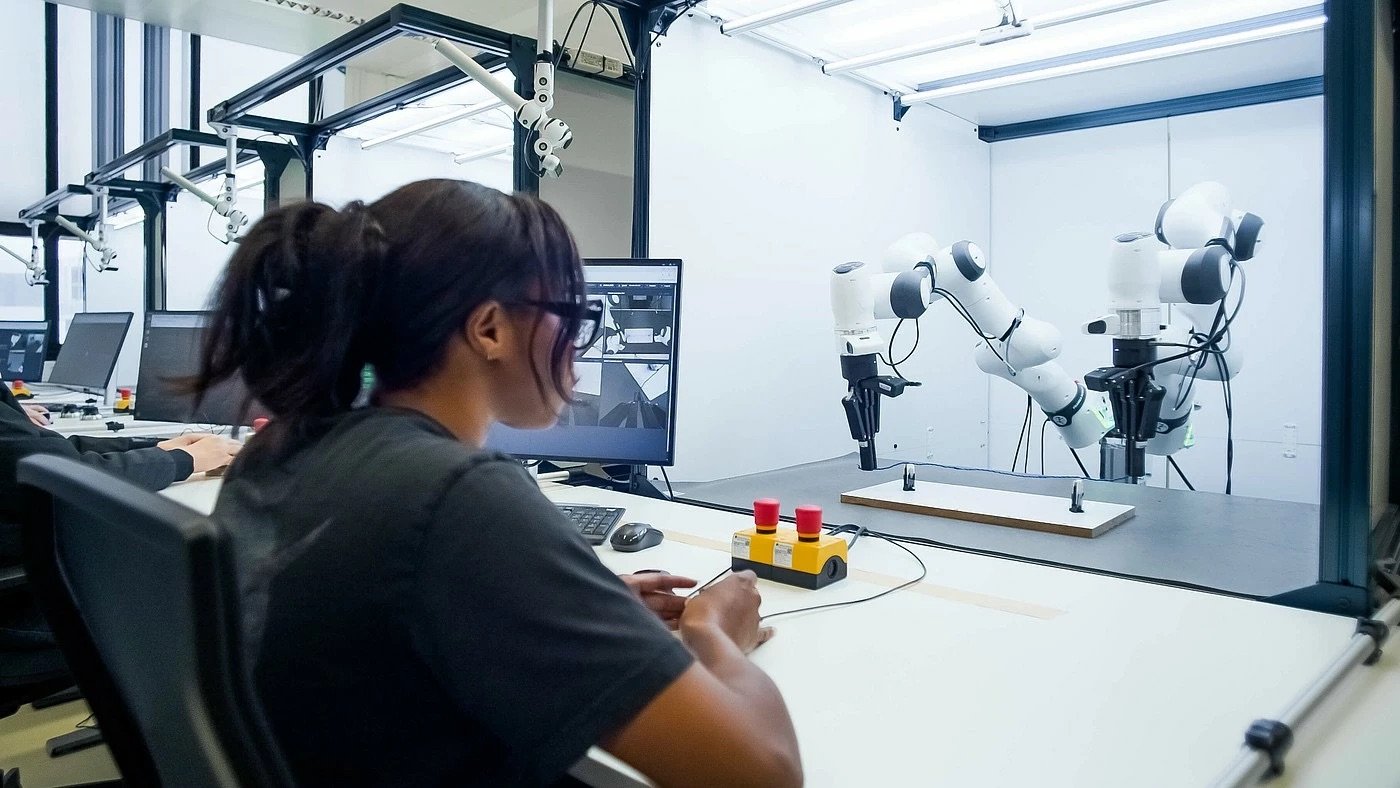

This UPenn GRASP SFI Seminar is from Eric Jang at 1X Technologies, on “Data Engines for Humanoid Robots.”

1X’s mission is to create an abundant supply of physical labor through androids that work alongside humans. I will share some of the progress 1X has been making toward general-purpose mobile manipulation. We have scaled up the number of tasks our androids can do by combining an end-to-end learning strategy with a no-code system for adding new robotic capabilities. Our Android Operations team trains its own models on the data it gathers, producing an extremely high-quality “farm-to-table” dataset that can be used to learn highly capable behaviors. I’ll also share an early preview of the progress we’ve been making toward a generalist “World Model” for humanoid robots.

[ UPenn ]

This Microsoft Future Leaders in Robotics and AI Seminar is from Chahat Deep Singh at the University of Maryland, on “Minimal Perception: Enabling Autonomy in Palm-Sized Robots.”

The solution to robot autonomy lies at the intersection of AI, computer vision, computational imaging, and robotics, resulting in minimal robots. This talk explores the challenge of developing a minimal perception framework for tiny robots (less than 6 inches in size) used in field operations such as inspection of confined spaces and robot pollination. Furthermore, we will delve into selective perception, embodied AI, and the future of robot autonomy in the palm of your hand.

[ UMD ]

The post “Video Friday: LASSIE On the Moon” by Evan Ackerman was published on 04/05/2024 by spectrum.ieee.org