Imagine an AI model that can use a heart scan to guess what racial category you’re likely to be put in – even when it hasn’t been told what race is, or what to look for. It sounds like science fiction, but it’s real.

My recent study with colleagues found that an AI model could guess whether a patient identified as Black or white from heart images with up to 96% accuracy, despite being given no explicit information about racial categories.

It’s a striking finding that challenges assumptions about the objectivity of AI and highlights a deeper issue: AI systems don’t just reflect the world – they absorb and reproduce the biases built into it.


First, it’s important to be clear: race is not a biological category. Modern genetics shows there is more variation within supposed racial groups than between them.

Race is a social construct, a set of categories invented by societies to classify people based on perceived physical traits and ancestry. These classifications don’t map cleanly onto biology, but they shape everything from lived experience to access to care.

Despite this, many AI systems are now learning to detect, and potentially act on, these social labels, because they are built using data shaped by a world that treats race as if it were biological fact.

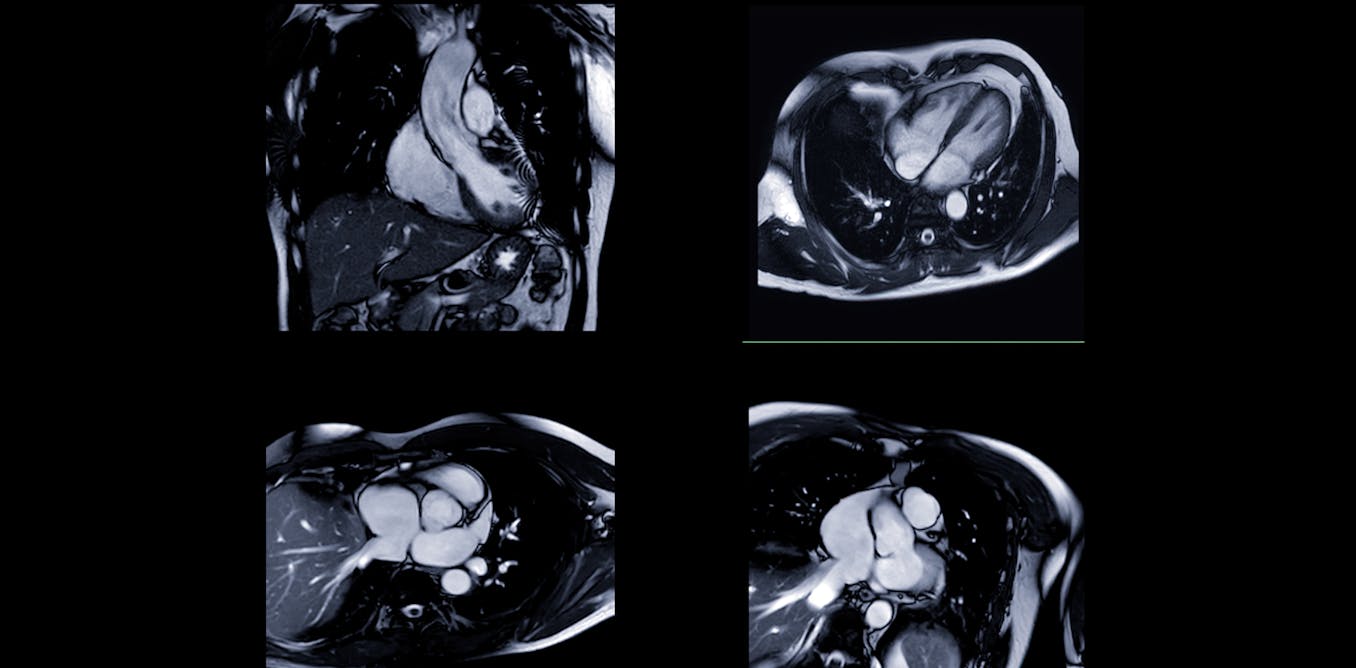

AI systems are already transforming healthcare. They can analyse chest X-rays, read heart scans and flag potential issues faster than human doctors – in some cases, in seconds rather than minutes. Hospitals are adopting these tools to improve efficiency, reduce costs and standardise care.

Bias isn’t a bug – it’s built in

But no matter how sophisticated, AI systems are not neutral. They are trained on real-world data – and that data reflects real-world inequalities, including those based on race, gender, age, and socioeconomic status. These systems can learn to treat patients differently based on these characteristics, even when no one explicitly programs them to do so.

One major source of bias is imbalanced training data. If a model learns primarily from lighter-skinned patients, for example, it may struggle to detect conditions in people with darker skin.

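Studies in dermatology have already shown this problem: image-based models trained mostly on pictures of lighter skin tend to perform worse on darker skin.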
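One practical way to catch this kind of gap is to stop relying on a single overall score and break performance down by group. The Python sketch below is a minimal, hypothetical audit: the `per_group_sensitivity` helper, the group labels and the toy counts are all made up for illustration and are not data from any study mentioned here. Sensitivity is the share of patients who truly have a condition that the model actually flags.

```python
from collections import defaultdict


def per_group_sensitivity(records):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN).

    Each record is a (group, has_condition, model_flagged) tuple.
    Only patients who truly have the condition count towards sensitivity.
    """
    detected = defaultdict(int)   # true positives per group
    positives = defaultdict(int)  # patients with the condition per group
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


# Hypothetical toy results: every patient here has the condition, and the
# model catches most cases in the well-represented group but far fewer in
# the under-represented one.
records = (
    [("lighter skin", True, True)] * 90
    + [("lighter skin", True, False)] * 10
    + [("darker skin", True, True)] * 6
    + [("darker skin", True, False)] * 4
)

# Because every record is a true case, this is also the overall sensitivity.
overall = sum(flagged for _, _, flagged in records) / len(records)
print(f"overall sensitivity: {overall:.2f}")
for group, sens in per_group_sensitivity(records).items():
    print(f"{group}: {sens:.2f}")
```

In this toy example the headline figure (96 of 110 cases, about 0.87) looks respectable, but the breakdown shows the model missing 4 in 10 cases in the smaller group, a gap the average hides because that group contributes so few patients.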

Even language models like ChatGPT aren’t immune: one study found evidence that some models still reproduce outdated and false medical beliefs, such as the myth that Black patients have thicker skin than white patients.

Sometimes AI models appear accurate, but for the wrong reasons, a phenomenon called shortcut learning. Instead of learning the complex features of a disease, a model might rely on irrelevant but easier-to-spot clues in the data.

Imagine two hospital wards: one uses scanner A to image severe COVID-19 patients, the other uses scanner B for milder cases. An AI trained on these scans might learn to associate scanner A with severe illness – not because it understands the disease better, but because it’s picking up on image artefacts specific to scanner A.

Now imagine a seriously ill patient is scanned using scanner B. The model might mistakenly classify them as less sick – not due to a medical error, but because it learned the wrong shortcut.
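The scanner scenario can be reproduced in a few lines. The Python sketch below is a toy under loudly stated assumptions: each patient is reduced to two hypothetical features (a `disease_signal` score standing in for genuine lung findings, and a `from_scanner_a` flag standing in for scanner artefacts), the ten patients are invented, and a one-feature "decision stump" stands in for a real image model. Because scanner A is only ever used on the severe ward, the scanner flag separates the training data perfectly, while the genuine but overlapping disease signal does not, so the learner latches onto the scanner.

```python
def fit_stump(X, y):
    """Pick the single feature and threshold with the best training accuracy.

    A deliberately simple learner: it rewards whatever separates the
    training labels best, whether or not that feature is medically relevant.
    Returns (training_accuracy, feature_index, threshold).
    """
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            preds = [row[j] >= t for row in X]
            acc = sum(p == label for p, label in zip(preds, y)) / len(y)
            if best is None or acc > best[0]:
                best = (acc, j, t)
    return best


def predict(stump, row):
    """Classify one patient as severe (True) or mild (False)."""
    _, j, t = stump
    return row[j] >= t


# Hypothetical rows: [disease_signal, from_scanner_a]. The disease signal
# overlaps between groups (0.45 is severe, 0.5 is mild), as real clinical
# features often do. Scanner A (flag = 1) is only used on the severe ward.
severe = [[s, 1] for s in (0.9, 0.8, 0.7, 0.6, 0.45)]
mild = [[s, 0] for s in (0.1, 0.2, 0.3, 0.4, 0.5)]
X = severe + mild
y = [True] * len(severe) + [False] * len(mild)

stump = fit_stump(X, y)
feature_names = ["disease_signal", "from_scanner_a"]
print("model relies on:", feature_names[stump[1]], "| training accuracy:", stump[0])

# A very sick patient who happens to be scanned on scanner B.
patient = [0.95, 0]
print("predicted severe?", predict(stump, patient))
```

Here the best the disease signal can manage is 9 out of 10 correct, while the scanner flag gets all 10, so the stump chooses the scanner. The patient with the strongest disease signal in the whole example is then classified as mild, purely because of which machine took the scan.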

This same kind of flawed reasoning could apply to race. If there are differences in disease prevalence between racial groups, the AI could end up learning to identify race instead of the disease – with dangerous consequences.

In the heart scan study, researchers found that the AI model wasn’t actually focusing on the heart itself, where there were few visible differences linked to racial categories. Instead, it drew information from areas outside the heart, such as subcutaneous fat as well as image artefacts – unwanted distortions like motion blur, noise, or compression that can degrade image quality. These artefacts often come from the scanner and can influence how the AI interprets the scan.

In this study, Black participants had a higher-than-average BMI, which could mean they had more subcutaneous fat, though this wasn’t directly investigated. Some research has shown that Black individuals tend to have less visceral fat and smaller waist circumference at a given BMI, but more subcutaneous fat. This suggests the AI may have been picking up on these indirect racial signals, rather than anything relevant to the heart itself.

This matters because when AI models learn race – or rather, social patterns that reflect racial inequality – without understanding context, the risk is that they may reinforce or worsen existing disparities.

This isn’t just about fairness – it’s about safety.

Solutions

But there are solutions:

Diversify training data: studies have shown that making datasets more representative improves AI performance across groups – without harming accuracy for anyone else.

Build transparency: many AI systems are considered “black boxes” because we don’t understand how they reach their conclusions. The heart scan study used heat maps to show which parts of an image influenced the AI’s decision. This kind of explainable AI can help doctors and patients trust (or question) results, and helps us catch a model that is relying on inappropriate shortcuts.

Treat race carefully: researchers and developers must recognise that race in data is a social signal, not a biological truth. It requires thoughtful handling to avoid perpetuating harm.
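This article does not say exactly how the heat maps in the heart scan study were produced, so the following is not that study’s method. It sketches one simple, model-agnostic technique, occlusion sensitivity: blank out one patch of the image at a time and measure how much the model’s score drops. Everything below is a hypothetical toy: a 6x6 “scan” with a bright “heart” in the centre, and a made-up `border_score` model that, unknown to its users, only looks at the outer edge of the image, loosely mimicking a model that draws on tissue outside the heart or on scanner artefacts.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Occlusion sensitivity: blank out each patch and record how much the
    model's score drops. Larger drops mean that region mattered more."""
    h, w = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy, leave original intact
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            for i in rows:
                for j in cols:
                    occluded[i][j] = fill
            drop = baseline - score_fn(occluded)
            for i in rows:
                for j in cols:
                    heat[i][j] = drop
    return heat


def border_score(image):
    """Hypothetical model that only 'looks' at the outer ring of pixels."""
    h, w = len(image), len(image[0])
    border = [image[i][j] for i in range(h) for j in range(w)
              if i in (0, h - 1) or j in (0, w - 1)]
    return sum(border) / len(border)


# Toy 6x6 scan: the "heart" is the bright 2x2 block in the centre.
size = 6
image = [[1.0 if 2 <= i <= 3 and 2 <= j <= 3 else 0.5 for j in range(size)]
         for i in range(size)]

heat = occlusion_map(image, border_score)
for row in heat:
    print(" ".join(f"{v:.3f}" for v in row))
```

In the printed map, the central “heart” patch scores zero while every patch touching the edge lights up: exactly the kind of pattern that would warn a clinician that the model is not basing its decision on the heart.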

AI models are capable of spotting patterns that even the most highly trained human eyes might miss. That’s what makes them so powerful – and potentially so dangerous. They learn from the same flawed world we do. That includes how we treat race: not as a scientific reality, but as a social lens through which health, opportunity and risk are unequally distributed.

If AI systems learn our shortcuts, they may repeat our mistakes – faster, at scale and with less accountability. And when lives are on the line, that’s a risk we cannot afford.

The post “AI can guess racial categories from heart scans – what it means and why it matters” by Tiarna Lee, Doctoral Candidate, School of Biomedical Engineering & Imaging Sciences, King’s College London was published on 05/12/2025 by theconversation.com