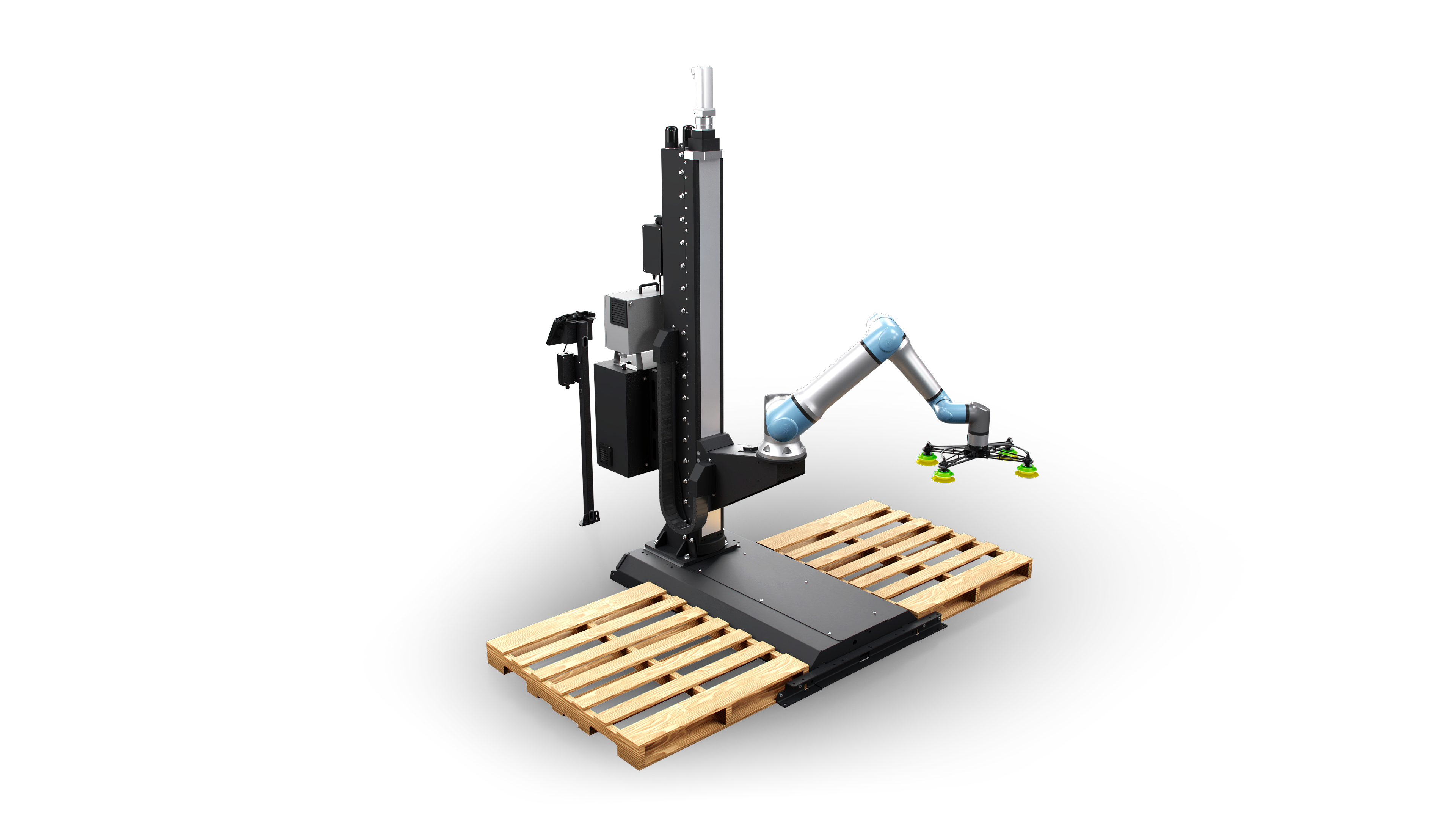

Internet-connected smart toys and AI-enabled robots capable of sophisticated social interactions with children are widely available today.

This is no doubt driven by rapid developments in artificial intelligence, whose impacts are widely felt. Companies are looking to AI to enhance productivity and revenue, while governments are considering safety measures in their AI strategies.

Child-focused AI products include:

Parents and professionals working with children may feel pressure to purchase these or other products as alternatives to in-person activities like play dates, therapy or games — or wonder about their usefulness and potential roles in children’s lives.

Before charging ahead into a world of AI babysitters, machine therapists and teacher robots, we should consider this technology carefully to assess its abilities and its appropriateness in children’s lives.

Informed choices

We must examine the assumptions built into these products and into the way they are marketed, and advocate for scientific approaches to assessing their efficacy.

Evidence-based approaches from psychology, child development, education and related fields can systematically test and observe new technologies and make recommendations for their use, free of vested commercial interests. In turn, this helps parents and professionals working with children make informed choices.

This is especially critical for AI systems, which do not provide transparency into their decision-making processes. There are also concerns about data privacy and surveillance.

What underlying assumptions are implied or directly asserted by these technologies and the way they are marketed? Three are examined below.

Assumption 1: Human traits like curiosity, empathy or emotions like happiness, sadness, “heart” or compassion can be realized in a machine.

A person might assume that because AI products can respond with human-like qualities and emotions, they also possess them. As other academics have highlighted, we have no reason to believe that an AI’s display of human emotion or empathy is anything more than simulation. As sociologist Sherry Turkle memorably described in a 2018 opinion piece, this technology presents us with “artificial intimacy,” a performance that will never compare to the depth of human inner life.

Assumption 2: When companions, teachers, or therapists engage with children, their human qualities and traits are irrelevant.

One might assume that AI systems do not actually need to possess human traits if their focus is on the practice of care, therapy or learning, which would sidestep the previous concern.

But the features that have made human presence essential to child development relate directly to emotional life and to qualities such as empathy and compassion.

Gold-standard interventions for children’s mental health problems, like psychotherapy, demand human characteristics such as warmth, openness, respect and trustworthiness. That AI products can simulate a therapeutic conversation is not, by itself, a reason to use them.

Assumption 3: Research that proves the effectiveness of human-led therapeutic, care or learning interventions is applicable to AI-led interventions.

Decades of research with humans have shown that the social relationships children form with others, whether friendships, therapeutic alliances or teacher-student bonds, are beneficial. As noted above, many child-focused AI products may position themselves as alternatives to these roles.

However, we should not take for granted that research on human-to-human experiences offers any insight into the benefits of child-AI relationships. Decades of psychological research show that contextual factors, such as culture and the way educational and therapeutic interventions are implemented, matter tremendously. Given the novelty of the technology and the lack of extensive non-commercial research on child-focused AI products, we must approach efficacy claims with caution.

The value of human presence

Early developmental periods are critical for setting children up for success in adulthood.

Social interactions with parents, friends and teachers can have profound impacts on a child’s learning, development and understanding of the world. But what if some of those interactions are with AI systems? Researchers in psychology, human-computer interaction and the learning sciences are investigating these and related questions.

Ultimately, we don’t believe AI should be completely left out of children’s lives. Generative AI, with its conversational interfaces, access to vast information and capacity for media creation, is an exciting form of AI. Learning workshops, such as those conducted by the MIT Media Lab, give children and youth opportunities to learn about data and privacy and to debate ideas about AI.

Children need human care and companionship and will always benefit from an engaged, emotional and thoughtful human presence. Empathy, compassion and validation are distinctively human. Allowing another person to feel what we feel and to say, “If I were in your place, I would feel the same way,” is irreplaceable. So perhaps we should leave these roles to those who perform them best: people!