If you use social media, you’ve likely come across deepfakes. These are video or audio clips of politicians, celebrities or others that have been manipulated using artificial intelligence (AI) to make it appear as though the person is saying or doing something they never actually said or did.

If you are freaked out by the idea of deepfakes, you are not alone. Our recent public opinion research in British Columbia found that deepfakes top the list of major concerns about AI, with 90 per cent of respondents saying they were concerned about them.

To say there is much hype about AI is an understatement. Media reports and comments from industry leaders simultaneously tout AI as “the next big thing” and as an existential risk that is going to wipe out humanity. This sensationalism at times distracts from the tangible risks we need to worry about, ranging from privacy to job displacement, from energy use to exploitation of workers.

While pragmatic policy conversations are happening, and not a moment too soon, they are largely happening behind closed doors.

In Canada, as in many places around the world, the federal government has repeatedly fallen short on consulting the public about the regulation of new technologies, leaving the door open for well-funded industry groups to shape the narrative and the possibilities for action on AI. It doesn’t help that Canadian news media keep citing the same tech entrepreneurs. It is as if no one else has anything to say about this topic.

We wanted to find out what the rest of society has to say.


Our AI survey

In October 2024, we at the Centre for Dialogue at Simon Fraser University worked with a public opinion firm to conduct a poll of more than 1,000 randomly selected B.C. residents to better understand their views on AI. Specifically, we wanted to understand their awareness of AI and its perceived impacts, their attitudes towards it, and their views on what we should be doing about it as a society.

Overall, we found British Columbians to be reasonably knowledgeable about AI. A majority were able to correctly identify everyday technologies that use AI from a standardized list, and 54 per cent reported having personally used an AI system or tool to generate text or media. This suggests that a good portion of the population is engaged enough with the technology to be invited into a conversation about it.

Familiarity did not necessarily breed confidence, however: more than 80 per cent of British Columbians in our study reported feeling “nervous” about the use of AI in society. But that nervousness wasn’t associated with catastrophizing. In fact, 64 per cent said they felt AI was “just another piece of technology among many,” versus only 32 per cent who bought into the idea that it was going to “fundamentally change society.” Fifty-seven per cent held the view that AI will never truly match what humans can do.

Instead, most respondents’ concerns were practical and grounded. Eighty-six per cent worried about losing a sense of personal agency or control if companies and governments were to use AI to make decisions that affect their lives. Eighty per cent felt that AI would make people feel more disconnected in society, while 70 per cent said that AI would show bias against certain groups of people.

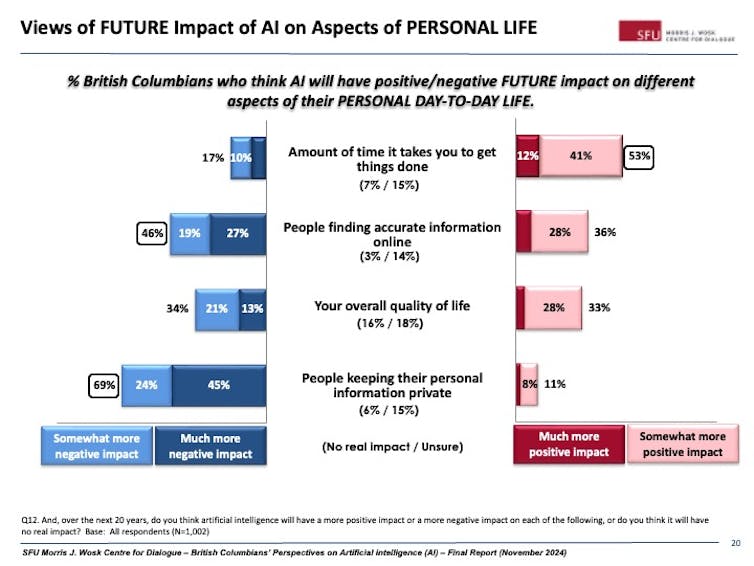

The vast majority (90 per cent) were concerned about deepfakes, and 85 per cent about the use of personal information to train AI. Just under 80 per cent expressed concern about companies replacing workers with AI. When it came to their personal lives, 69 per cent of respondents worried that AI would pose challenges to their privacy, and 46 per cent to their ability to find accurate information online.

(Author provided)

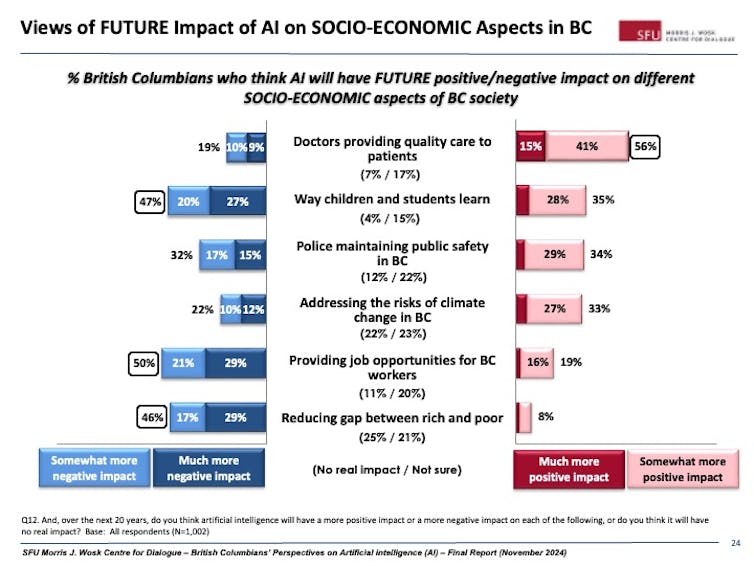

A good number did hold positive views of AI. Fifty-three per cent were excited about how AI could push the boundaries of what humans can achieve. Just under 60 per cent expressed fascination with the idea that machines can learn, think or assist humans in tasks. In their personal lives, 53 per cent of respondents felt positively about AI’s potential to help them get things done more quickly. They also felt relatively excited about the future of AI in certain fields or industries, notably medicine, where B.C. is facing a palpable capacity shortage.

If participants in our survey had one message for their government, it was to start regulating AI. A majority (58 per cent) felt that the risks of an unregulated AI tech sector were greater than the risk that government regulation might stifle AI’s future development, while only 15 per cent held the opposite view. Around 20 per cent weren’t sure.

(Author provided)

Low trust in government and institutions

Some scholars have argued that government and the AI industry find themselves in a “prisoner’s dilemma,” with governments hesitating to introduce regulations out of fear of hamstringing the tech sector. But by failing to regulate against the adverse effects of AI on society, they may be costing the industry the support of a cautious and conscientious public, and ultimately its social licence.

Recent reports suggest that uptake of AI technologies in Canadian companies has been excruciatingly slow. Perhaps, as our results hint, Canadians will hesitate to fully adopt AI unless its risks are managed through regulation.

But government faces an issue here: our institutions have a trust problem.

On the one hand, 55 per cent of respondents felt strongly that governments should be responsible for setting rules and limiting the risks of AI, as opposed to leaving it to tech companies to do this on their own (25 per cent) or expecting individuals to develop the literacy to protect themselves (20 per cent).

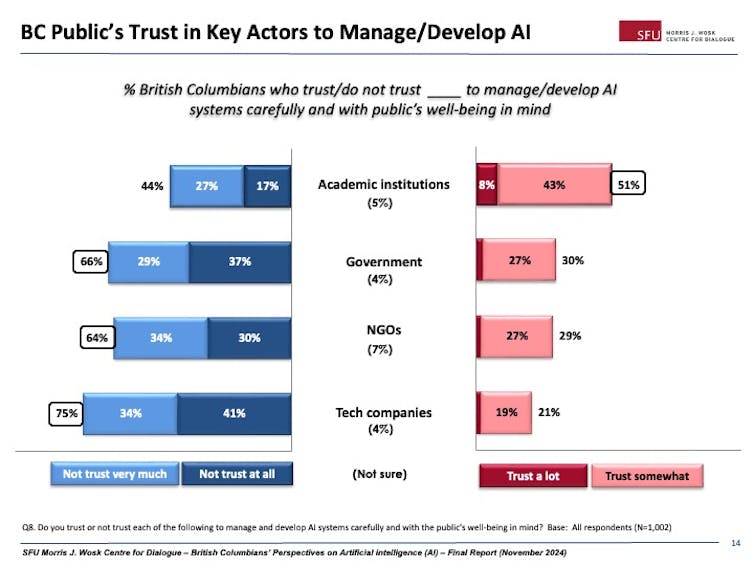

On the other hand, our study shows that trust in government to manage and develop AI carefully and with the public’s interest in mind is low, at only 30 per cent. Trust is even lower in tech companies (21 per cent) and non-government organizations (29 per cent).

Academic institutions do best on the trust question, with 51 per cent of respondents somewhat or strongly trusting them to manage this responsibly. Just over half is still not exactly a flattering figure. But we might just have to take it as a call to action.

(Author provided)

Toward participatory AI governance

Studies on AI governance in Canada repeatedly point to the need for better, more transparent, and more democratic mechanisms for public participation. Some would say the issue is too technical to invite the public into, but this framing of AI as the domain of only the technical elite is part of what is holding us back from having a truly societal conversation.

Our survey suggests residents are not only aware of and interested in AI, but also express a desire both for action and for more information to help them continue engaging with this topic.

The public may be more ready for the AI conversation than it gets credit for.

Canada needs federal policy to help ensure the responsible management of AI. The federal government recently launched a new AI Safety Institute to better understand and scientifically approach the risks of advanced AI systems. This body, or another like it, should include a focus on developing mechanisms for more participatory AI governance.

Provinces also have room to lead on AI within Canada’s constitutional framework. Ontario and Québec have done some of this work, and B.C. already has its own guiding principles for approaching AI responsibly.

Canada and B.C. have a chance to lead a highly participatory approach to AI policy. Convening bodies like our own Centre for Dialogue are keen to assist in the discussion, as are many academics. Our study indicates the public is ready to join.

The post “Survey on AI finds most people want it regulated, but trust in government remains low” by Aftab Erfan, Associate Member, School of Public Policy & Executive Director, Morris J. Wosk Centre for Dialogue, Simon Fraser University was published on 12/05/2024 by theconversation.com