Consciousness is a subjective, personal thing, so scientists who study it usually rely on people to tell them what they are conscious of. The problem is that infants are presumably conscious but, without speech, they can’t let us know. Animals like dogs, cats, octopuses and maybe insects might also be conscious, but they can’t tell us that either.

There are even problems with studying adult human consciousness. People might not be fully authoritative about their own consciousness. Some philosophers and scientists think that awake, attentive adults might be conscious of much more than can be put into words.

Because of these issues, academics in both science and philosophy have recently developed new ways of measuring consciousness.

For example, researchers have developed the “natural kinds” approach to studying consciousness in infants. This involves studying the brains and behaviour of adults to compile a list of the behavioural and brain patterns that are present when we are conscious of something but absent when we are not. Researchers call these “markers” of consciousness.

Patterns of brain activity called “event-related potentials” can be recorded from the scalp using electroencephalography (EEG). Scientists use this technique to identify brain patterns that are present when we consciously see something, but not when our awareness of that thing is subliminal. Some scientists think these patterns can also be found in infants, and even that this activity gets stronger as the infant gets older.

But developmental psychologist Andy Bremner and I have suggested we shouldn’t rely on just one marker of consciousness. If many markers all indicate that an infant is conscious, we can be more confident in our conclusions about infant consciousness. Other candidate markers for infants include the ability to pay attention, and the ability to remember an adult’s action and imitate it later.

Animal consciousness

Animals are another challenge to the study of the mind. Most people think animals like chimps and dogs are conscious, but how far from humans on the tree of life does consciousness extend?

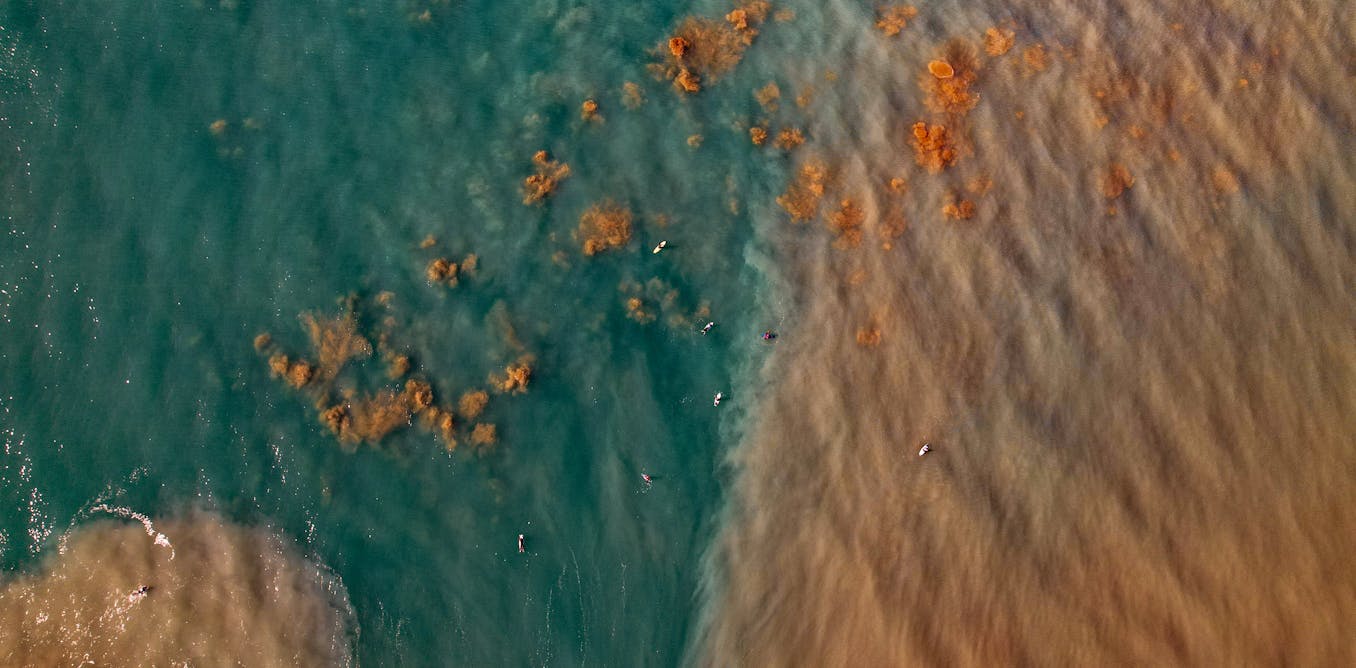

There is solid evidence that octopuses can experience pain. They will groom an injured arm, and avoid locations where they have had unpleasant experiences. But the evidence for consciousness in insects like bees or ants is less clear. How would we ever know? These questions are especially pressing, since they have consequences for our treatment of animals.

mairaali26/Shutterstock

Some philosophers have suggested we should tackle the issue of animal consciousness using the “theory-light approach”. We can’t just take the “correct” theory of human consciousness and then use it to decide which animals are conscious, because no one knows what the correct theory of human consciousness is. A 2022 review listed 22 different scientific theories of consciousness, and there are many more. These theories disagree with each other in many ways.

The theory-light approach involves surveying the scientific theories of consciousness and identifying the ideas they agree on. We can then use these shared ideas to work out which animals are conscious.

For example, many scientific theories of consciousness agree that being conscious of something makes it easier for an organism to think about that thing. On this view, the job of consciousness might be to link an animal’s sensory perception of something with its higher-level cognitive abilities.

This research on baby and animal consciousness raises the question of whether AI systems, including robots, could be conscious. The ever-increasing presence of robots in our social and cultural lives forces us to reckon with difficult scientific questions about how similar their minds are to ours.

There seems to be a tantalising link between animal consciousness and robot consciousness. One approach in robotics draws inspiration from swarming insects like ants and bees. After all, if you want to design a group of robots that can explore a large area of terrain, you should look at organisms that have been doing it for millions of years. But if scientists and philosophers ultimately discover that these insects are conscious, we will have to ask whether the robots modelled on them are conscious too.

When it comes to whether AI systems, including robots, can be conscious, we run into an old philosophical debate that has raged since before the modern surge in AI and shows no signs of going away.

On one side of this debate, some researchers claim robots cannot be conscious because they do not have biological brains. This side emphasises that the only consciousness we can be sure of is found in biological organisms, so there’s no reason to think that robots are capable of it.

The other side of the debate says that, if AI systems like robots become sufficiently similar to biological creatures, then we should say that they are conscious even though they are metal and wire rather than flesh and blood. Until this philosophical debate can be resolved, we will not know whether AI and robot consciousness is possible.

The post “Babies and animals can’t tell us if they have consciousness – but philosophers and scientists are starting to find answers” by Henry Taylor, Associate Professor, Department of Philosophy, University of Birmingham was published on 08/19/2024 by theconversation.com
