Melbourne-based publisher Black Inc Books is seeking to partner with an unnamed AI company or companies and wants its authors to allow their work to be used to train artificial intelligence.

Writers were reportedly asked to grant Black Inc the ability to exercise key rights within their copyright to help develop machine learning and AI systems. This includes using the writers’ work in the training, testing, validation and subsequent deployment of AI systems.

The contract is offered on an opt-in basis, said a Black Inc spokesperson, and the company would negotiate with “reputable” AI companies. But authors, literary agents and the Australian Society of Authors have criticised the move. “I feel like we’re being asked to sign our own death warrant,” said novelist Laura Jean McKay.

Such partnerships between AI companies and publishers might soon become more common. Non-fiction authors, in particular, should be worried. Writers of fiction should also be concerned, though it’s likely there will always be a market for original works of fiction written by humans.

Large language models are essentially probability-driven algorithmic language systems. And they continue to improve rapidly. This has huge implications for authors.

What are publishers doing?

Publishers are trying to partner with AI companies the way the music industry and other content industries did with Spotify, Apple Music, Vevo, Facebook, TikTok, Google, YouTube and the like.

When the latter companies came along, they were seen as threats to established copyright interests. Licensing solved a lot of problems – by permitting use to occur in exchange for a recurrent fee. While there have been disputes about the size of the licensing fee, as in the controversy around Spotify and musicians’ royalties, the licensing solution has largely allowed the content industries and disruptive new technologies to co-exist.

In theory, the licensing solution should hold true for authors, publishers and AI companies. After all, a licensing system would offer a stream of revenue. But in reality there might just be a trickle of income for authors and the basis for providing it under existing laws might be quite weak.

Authors and publishers are depending on copyright law to protect them. Unfortunately, copyright law regulates copying, not the development of a capability to produce probability-driven language outputs.

Data crunch

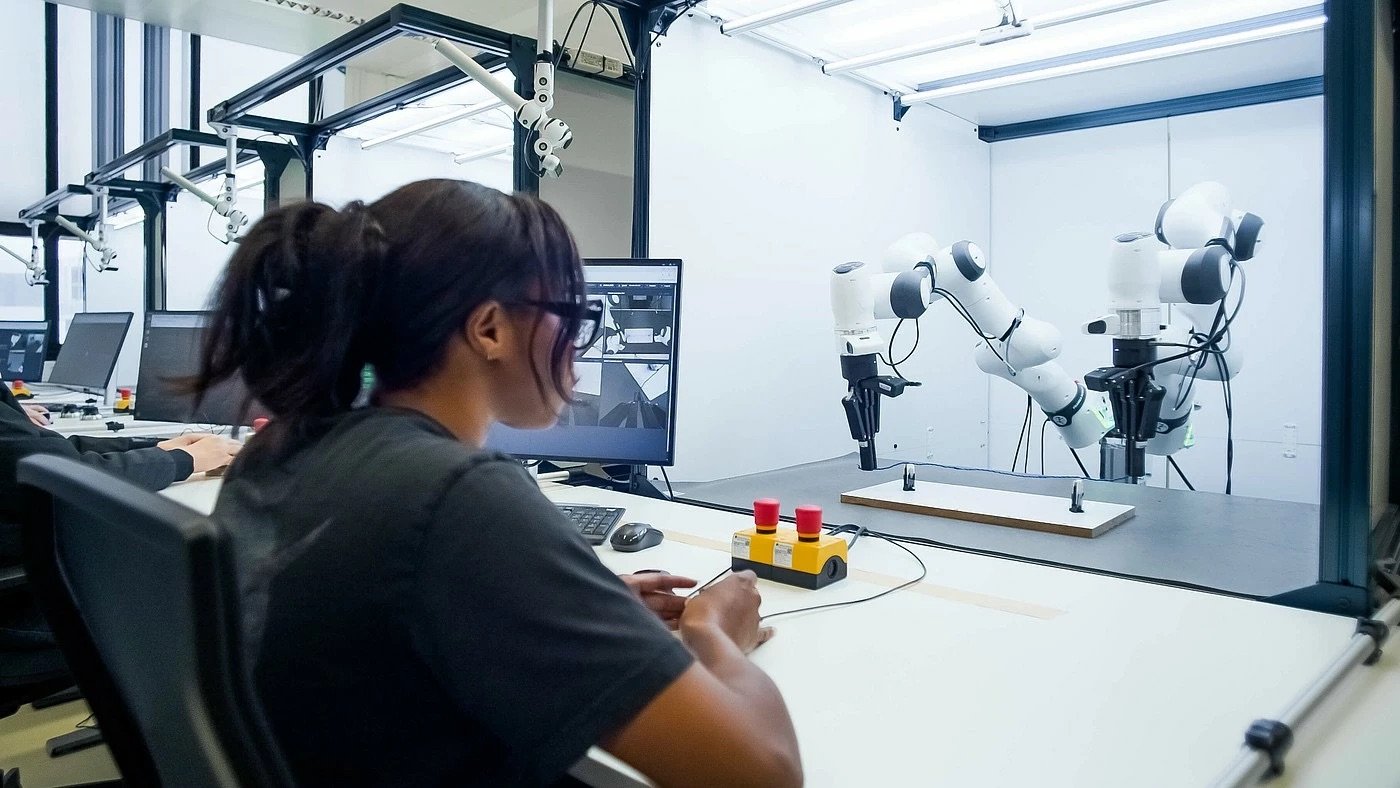

AI systems need immense amounts of data and a lot of training to get anywhere near the standard required to replicate or “replace” human authors.

In the first phase of the AI revolution, AI companies appear simply to have gone and hoovered up content that was copyright-protected. Now the publishers and organisations collecting fees on behalf of copyright owners are fighting back.

But the copyright disputes between AI companies and publishers are far from over. A group of news organisations in Canada is currently suing the maker of ChatGPT over copyright infringement. In the United States, The New York Times versus OpenAI case is ongoing.

At this stage, anything could happen with copyright law, because a superior court has not yet handed down a definitive judgement in an AI copyright infringement case. While governments, including Australia’s, are looking at the issue, there is no binding international consensus on the rules.

There is a race to set the rules around AI and the European Union is leading that race. This is why US vice-president J.D. Vance was so keen to shut down the possibility of EU regulation expanding to other jurisdictions.

Nevertheless, the bigger issue is data. Put simply, AI systems need human beings to write and publish their writings so they can have data to learn from. No human writings, no usable data.

It thus makes sense for AI companies to partner with publishers. It obviates needless copyright disputes and provides access to data.

But does it make sense for writers to partner with AI?

What about copyright law?

Copyright protects expression. Copyright law works by deciding who can and cannot copy that expression, and under what terms and conditions.

There is certainly copying being done in the development of AI systems. However, it is far from clear whether any actual copying happens after the AI system has learned from the data.

Black Inc is essentially asking for a contract variation with its authors. According to the Guardian, the publisher will split the net receipts from AI deals with the author 50/50. Black Inc’s head of marketing and publicity Kate Nash has said:

“We believe authors should be credited and compensated appropriately and that safeguards are necessary to protect ownership rights in response to increasing industrial automation.”

But while publishers have spoken a lot about licensing, they are a bit vague on why the AI companies would need to keep paying them. AI tech is concerned with the development of capabilities. It does not operate on the basis of continued copying of protected material. Thus, it is not like music on YouTube or Spotify or other creative content on Google.

To put it another way, once the AI has learned how to write, it has acquired that capability.

It is true AI can be manipulated to produce output that reflects copyright-protected content. But tricking the AI into reproducing protected content via the cunning use of prompts is not the ordinary way the system runs, so it is a weak basis for a copyright infringement claim.

Ultimately, as McKay suggests, the problem is that AI systems might out-compete some authors. That will hurt AI companies because it diminishes the supply of available data. It will devastate authors because they will lose their livelihoods. It will damage publishers because they need authors and the AI companies with whom they wish to partner ultimately might not need them.

This is a problem industry cannot solve. It is why we have governments, which now need to step in and work out a set of rules creating a workable system of innovation and rights.

The post “Black Inc has asked authors to sign AI agreements. But why should writers help AI learn how to do their job?” by Dilan Thampapillai, Dean of Law, University of Wollongong was published on 03/06/2025 by theconversation.com